Massimiliano Mancini

Assistant Professor,

University of Trento

Trento, Italy

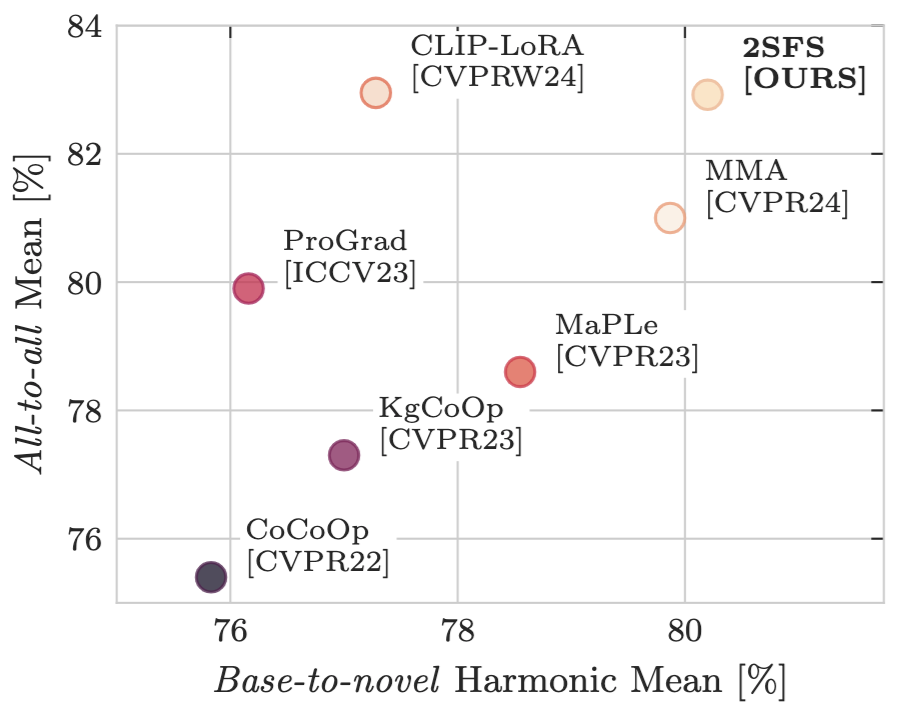

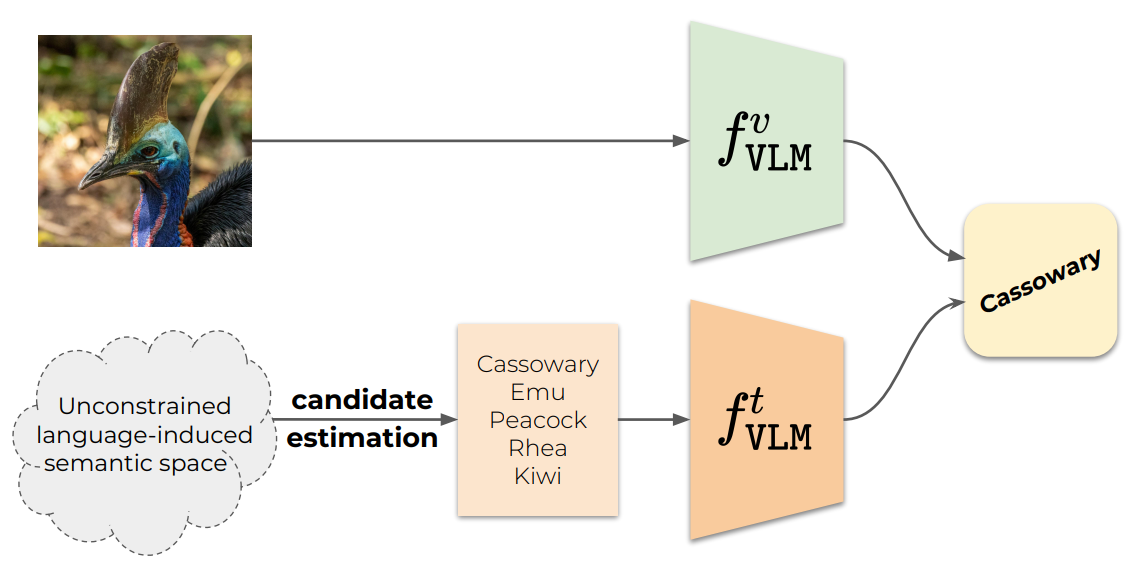

Hello! I am Massimiliano (Massi), a tenure-track Assistant Professor (RTD-b) at the Department of Information Engineering and Computer Science of the University of Trento. My work aims to develop algorithms that improve the generalization of deep architectures to new visual domains and semantic concepts, focusing on problems such as transfer learning, trustworthiness, and compositionality in computer vision. I am a member of ELLIS.

If you come to Italy, visit my wonderful medieval hometown Monte Santa Maria Tiberina!

news

| Date | News |
|---|---|
| Jun 26, 2025 | We got 2 papers accepted at ICCV 2025! 😁 Congrats to Alessandro (“open-world” evaluation of multimodal LLMs) and Deepayan (training-free personalization)! |
| May 15, 2025 | I started my new position as a tenure-track assistant professor (RTDb) here at the Department of Information Engineering and Computer Science (DISI) of the University of Trento. Thanks to all who supported me during this journey! I am excited about the next steps and, if you are interested in joining this new journey, just drop me an email! |
| Apr 10, 2025 | I will co-organize the |
| Mar 28, 2025 | I will serve as Area Chair for NeurIPS 2025, in the Datasets and Benchmarks Track. |
| Feb 27, 2025 | We came back stronger: 5/7 papers accepted at CVPR 2025! 😁 Congrats to Davide, Luca, Marco, Matteo, and Quentin! A pity for the two rejected ones, but ICCV is just around the corner… |