publications

publications by categories in reversed chronological order. generated by jekyll-scholar.

2025

-

Test-time Vocabulary Adaptation for Language-driven Object DetectionMingxuan Liu , Tayler L. Hayes , Massimiliano Mancini, and 3 more authorsIn IEEE International Conference on Image Processing (ICIP) , 2025

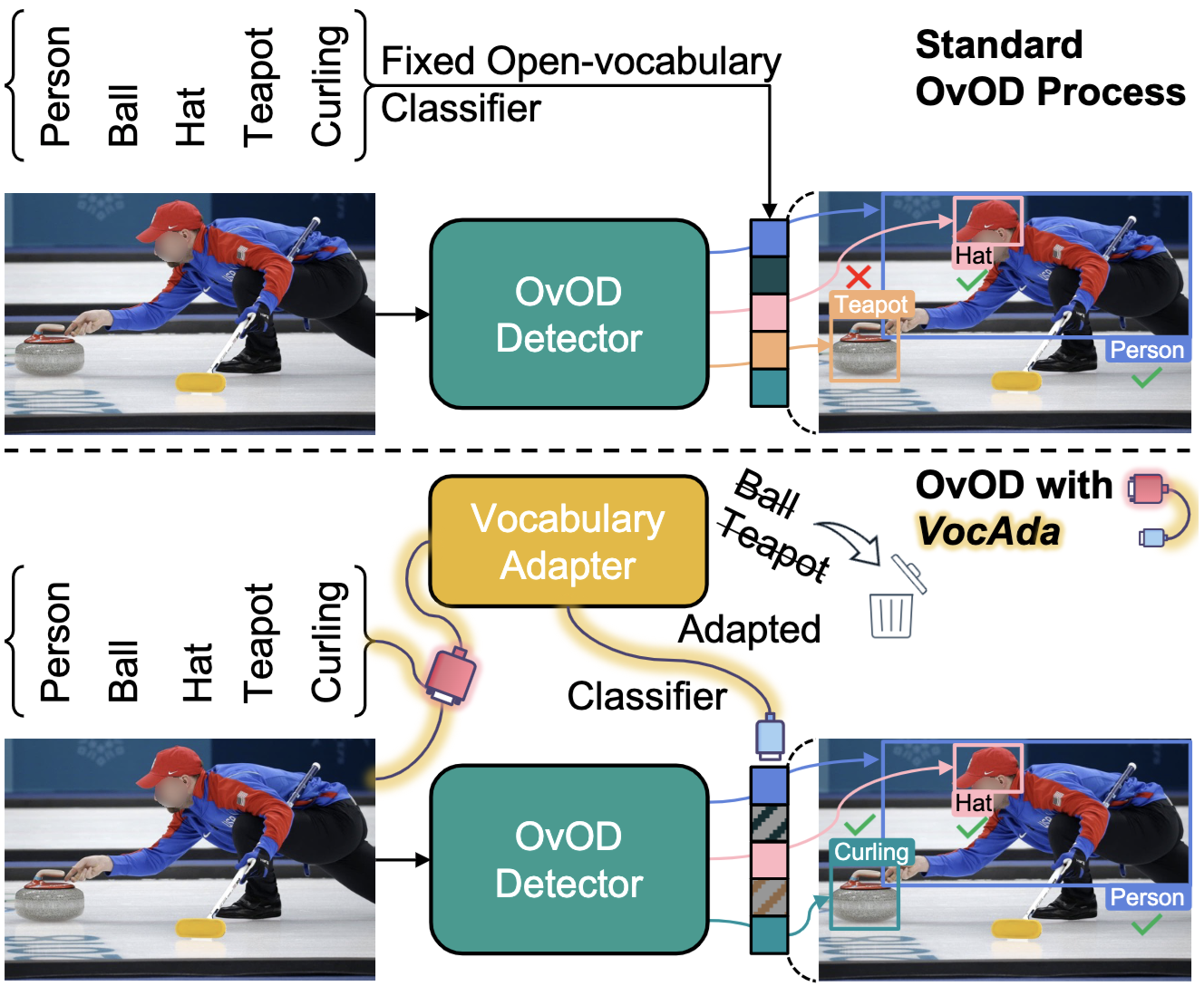

Test-time Vocabulary Adaptation for Language-driven Object DetectionMingxuan Liu , Tayler L. Hayes , Massimiliano Mancini, and 3 more authorsIn IEEE International Conference on Image Processing (ICIP) , 2025Open-vocabulary object detection models allow users to freely specify a class vocabulary in natural language at test time, guiding the detection of desired objects. However, vocabularies can be overly broad or even mis-specified, hampering the overall performance of the detector. In this work, we propose a plug-and-play Vocabulary Adapter (VocAda) to refine the user-defined vocabulary, automatically tailoring it to categories that are relevant for a given image. VocAda does not require any training, it operates at inference time in three steps: i) it uses an image captionner to describe visible objects, ii) it parses nouns from those captions, and iii) it selects relevant classes from the user-defined vocabulary, discarding irrelevant ones. Experiments on COCO and Objects365 with three state-of-the-art detectors show that VocAda consistently improves performance, proving its versatility. The code is open source.

@inproceedings{liu2025test, title = {Test-time Vocabulary Adaptation for Language-driven Object Detection}, author = {Liu, Mingxuan and Hayes, Tayler L. and Mancini, Massimiliano and Ricci, Elisa and Volpi, Riccardo and Csurka, Gabriela}, booktitle = {IEEE International Conference on Image Processing (ICIP)}, year = {2025}, } -

Not Only Text: Exploring Compositionality of Visual Representations in Vision-Language ModelsDavide Berasi , Matteo Farina , Massimiliano Mancini, and 2 more authorsProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025

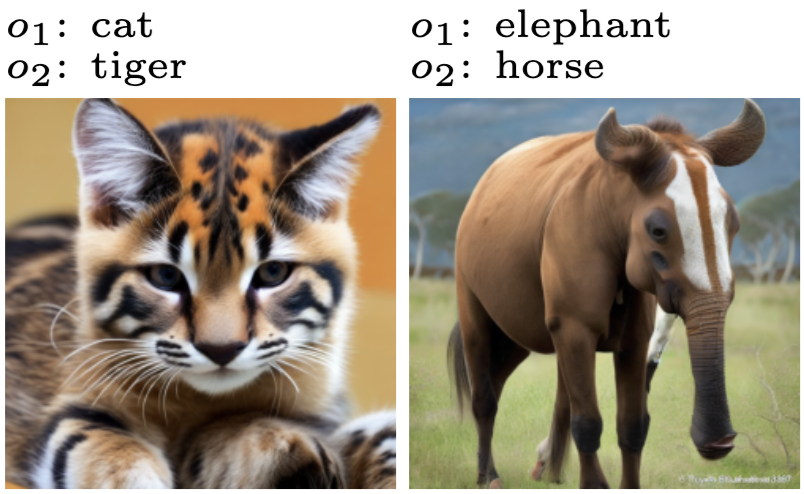

Not Only Text: Exploring Compositionality of Visual Representations in Vision-Language ModelsDavide Berasi , Matteo Farina , Massimiliano Mancini, and 2 more authorsProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025Vision-Language Models (VLMs) learn a shared feature space for text and images, enabling the comparison of inputs of different modalities. While prior works demonstrated that VLMs organize natural language representations into regular structures encoding composite meanings, it remains unclear if compositional patterns also emerge in the visual embedding space. In this work, we investigate compositionality in the image domain, where the analysis of compositional properties is challenged by noise and sparsity of visual data. We address these problems and propose a framework, called Geodesically Decomposable Embeddings (GDE), that approximates image representations with geometry-aware compositional structures in the latent space. We demonstrate that visual embeddings of pre-trained VLMs exhibit a compositional arrangement, and evaluate the effectiveness of this property in the tasks of compositional classification and group robustness. GDE achieves stronger performance in compositional classification compared to its counterpart method that assumes linear geometry of the latent space. Notably, it is particularly effective for group robustness, where we achieve higher results than task-specific solutions. Our results indicate that VLMs can automatically develop a human-like form of compositional reasoning in the visual domain, making their underlying processes more interpretable.

@article{berasi2025not, title = {Not Only Text: Exploring Compositionality of Visual Representations in Vision-Language Models}, author = {Berasi, Davide and Farina, Matteo and Mancini, Massimiliano and Ricci, Elisa and Strisciuglio, Nicola}, journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2025}, } -

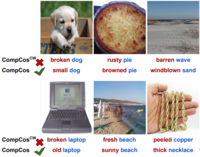

Compositional Caching for Training-free Open-vocabulary Attribute DetectionMarco Garosi , Alessandro Conti , Gaowen Liu , and 2 more authorsIn , 2025

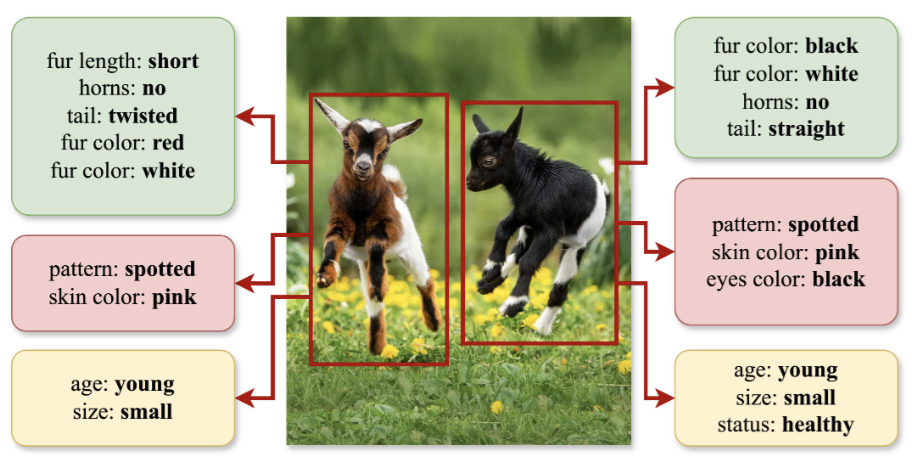

Compositional Caching for Training-free Open-vocabulary Attribute DetectionMarco Garosi , Alessandro Conti , Gaowen Liu , and 2 more authorsIn , 2025Attribute detection is crucial for many computer vision tasks, as it enables systems to describe properties such as color, texture, and material. Current approaches often rely on labor-intensive annotation processes which are inherently limited: objects can be described at an arbitrary level of detail (eg, color vs. color shades), leading to ambiguities when the annotators are not instructed carefully. Furthermore, they operate within a predefined set of attributes, reducing scalability and adaptability to unforeseen downstream applications. We present Compositional Caching (ComCa), a training-free method for open-vocabulary attribute detection that overcomes these constraints. ComCa requires only the list of target attributes and objects as input, using them to populate an auxiliary cache of images by leveraging web-scale databases and Large Language Models to determine attribute-object compatibility. To account for the compositional nature of attributes, cache images receive soft attribute labels. Those are aggregated at inference time based on the similarity between the input and cache images, refining the predictions of underlying Vision-Language Models (VLMs). Importantly, our approach is model-agnostic, compatible with various VLMs. Experiments on public datasets demonstrate that ComCa significantly outperforms zero-shot and cache-based baselines, competing with recent training-based methods, proving that a carefully designed training-free approach can successfully address open-vocabulary attribute detection.

@inproceedings{garosi2025comca, title = {Compositional Caching for Training-free Open-vocabulary Attribute Detection}, author = {Garosi, Marco and Conti, Alessandro and Liu, Gaowen and Ricci, Elisa and Mancini, Massimiliano}, journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2025}, } -

Classifier-to-Bias: Toward Unsupervised Automatic Bias Detection for Visual ClassifiersQuentin Guimard , Moreno D’Incà , Elia Peruzzo , and 2 more authorsProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025

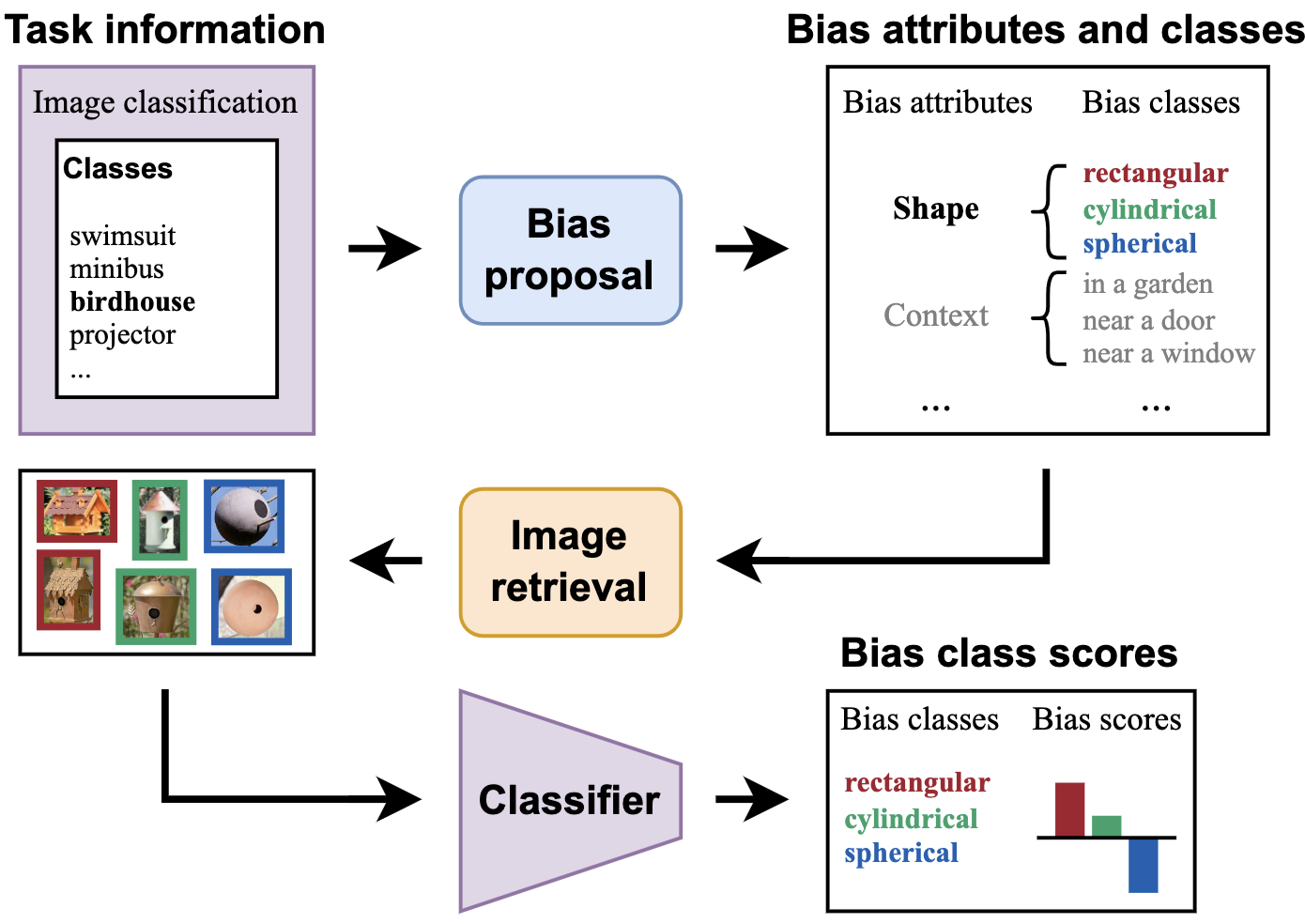

Classifier-to-Bias: Toward Unsupervised Automatic Bias Detection for Visual ClassifiersQuentin Guimard , Moreno D’Incà , Elia Peruzzo , and 2 more authorsProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025A person downloading a pre-trained model from the web should be aware of its biases. Existing approaches for bias identification rely on datasets containing labels for the task of interest, something that a non-expert may not have access to, or may not have the necessary resources to collect: this greatly limits the number of tasks where model biases can be identified. In this work, we present Classifier-to-Bias (C2B), the first bias discovery framework that works without access to any labeled data: it only relies on a textual description of the classification task to identify biases in the target classification model. This description is fed to a large language model to generate bias proposals and corresponding captions depicting biases together with task-specific target labels. A retrieval model collects images for those captions, which are then used to assess the accuracy of the model wrt the given biases. C2B is training-free, does not require any annotations, has no constraints on the list of biases, and can be applied to any pre-trained model on any classification task. Experiments on two publicly available datasets show that C2B discovers biases beyond those of the original datasets and outperforms a recent state-of-the-art bias detection baseline that relies on task-specific annotations, being a promising first step toward addressing task-agnostic unsupervised bias detection.

@article{guimard2025c2b, title = {Classifier-to-Bias: Toward Unsupervised Automatic Bias Detection for Visual Classifiers}, author = {Guimard, Quentin and D'Incà, Moreno and Peruzzo, Elia and Mancini, Massimiliano and Ricci, Elisa}, journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2025}, } -

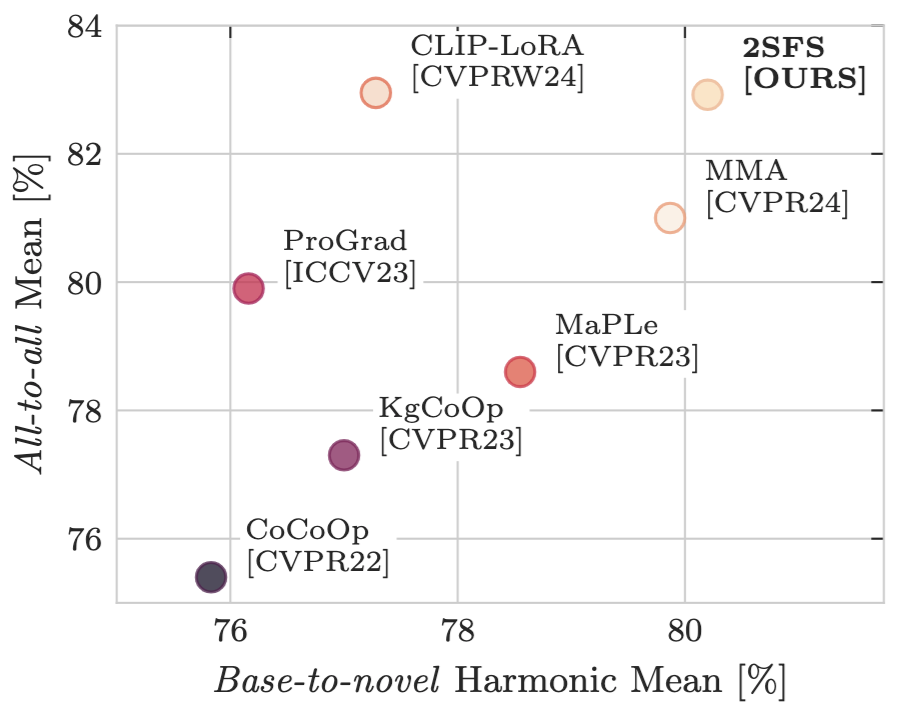

Rethinking Few-Shot Adaptation of Vision-Language Models in Two StagesMatteo Farina , Massimiliano Mancini, Giovanni Iacca , and 1 more authorProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025

Rethinking Few-Shot Adaptation of Vision-Language Models in Two StagesMatteo Farina , Massimiliano Mancini, Giovanni Iacca , and 1 more authorProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025An old-school recipe for training a classifier is to (i) learn a good feature extractor and (ii) optimize a linear layer atop. When only a handful of samples are available per category, as in Few-Shot Adaptation (FSA), data are insufficient to fit a large number of parameters, rendering the above impractical. This is especially true with large pre-trained Vision-Language Models (VLMs), which motivated successful research at the intersection of Parameter-Efficient Fine-tuning (PEFT) and FSA. In this work, we start by analyzing the learning dynamics of PEFT techniques when trained on few-shot data from only a subset of categories, referred to as the "base" classes. We show that such dynamics naturally splits into two distinct phases: (i) task-level feature extraction and (ii) specialization to the available concepts. To accommodate this dynamic, we then depart from prompt- or adapter-based methods and tackle FSA differently. Specifically, given a fixed computational budget, we split it to (i) learn a task-specific feature extractor via PEFT and (ii) train a linear classifier on top. We call this scheme Two-Stage Few-Shot Adaptation (2SFS). Differently from established methods, our scheme enables a novel form of selective inference at a category level, i.e., at test time, only novel categories are embedded by the adapted text encoder, while embeddings of base categories are available within the classifier. Results with fixed hyperparameters across two settings, three backbones, and eleven datasets, show that 2SFS matches or surpasses the state-of-the-art, while established methods degrade significantly across settings.

@article{farina2025rethinking, title = {Rethinking Few-Shot Adaptation of Vision-Language Models in Two Stages}, author = {Farina, Matteo and Mancini, Massimiliano and Iacca, Giovanni and Ricci, Elisa}, journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2025}, } -

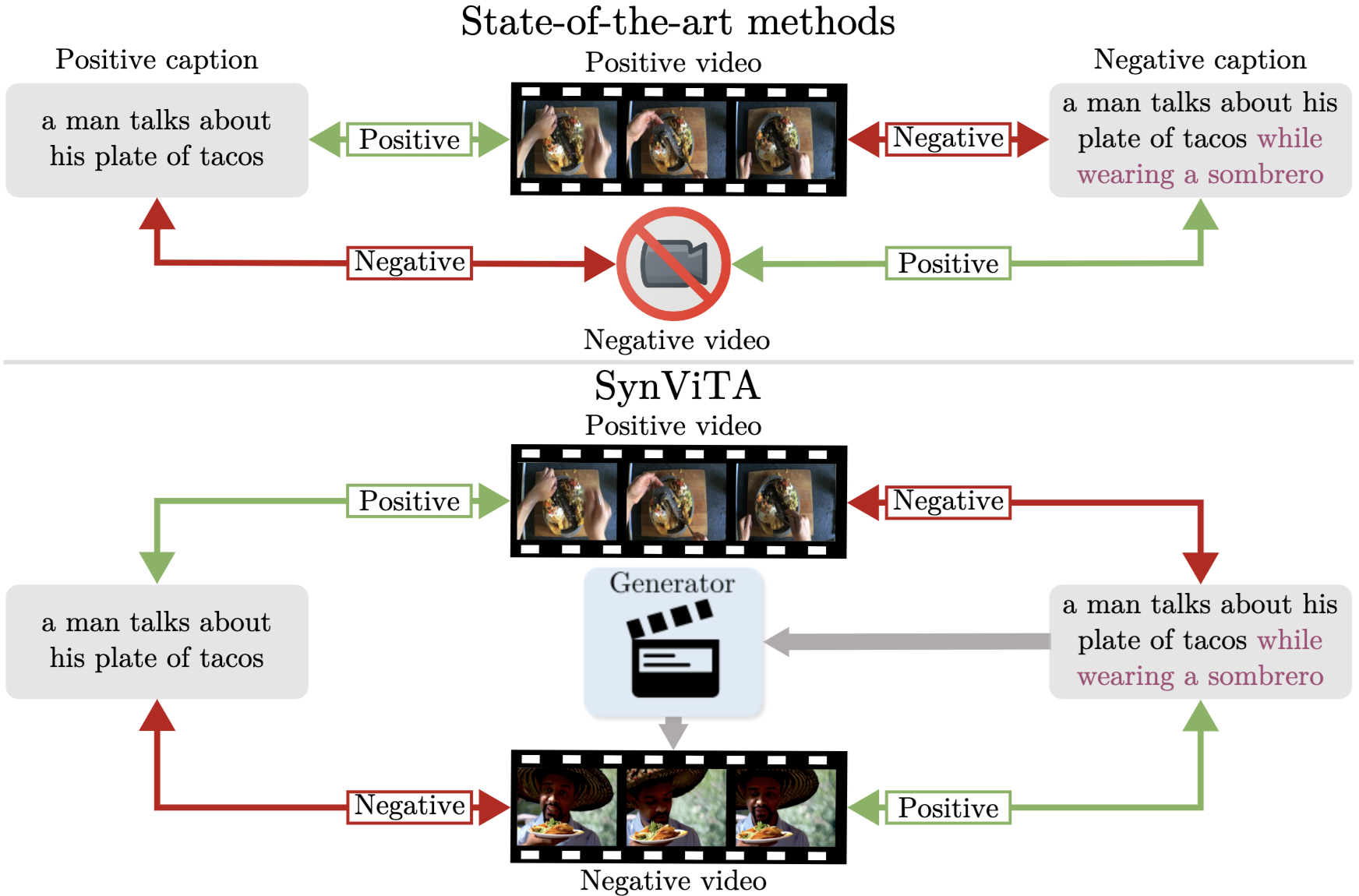

Can Text-to-Video Generation help Video-Language Alignment?Luca Zanella , Massimiliano Mancini, Willi Menapace , and 3 more authorsProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025

Can Text-to-Video Generation help Video-Language Alignment?Luca Zanella , Massimiliano Mancini, Willi Menapace , and 3 more authorsProceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025Recent video-language alignment models are trained on sets of videos, each with an associated positive caption and a negative caption generated by large language models. A problem with this procedure is that negative captions may introduce linguistic biases, i.e., concepts are seen only as negatives and never associated with a video. While a solution would be to collect videos for the negative captions, existing databases lack the fine-grained variations needed to cover all possible negatives. In this work, we study whether synthetic videos can help to overcome this issue. Our preliminary analysis with multiple generators shows that, while promising on some tasks, synthetic videos harm the performance of the model on others. We hypothesize this issue is linked to noise (semantic and visual) in the generated videos and develop a method, SynViTA, that accounts for those. SynViTA dynamically weights the contribution of each synthetic video based on how similar its target caption is w.r.t. the real counterpart. Moreover, a semantic consistency loss makes the model focus on fine-grained differences across captions, rather than differences in video appearance. Experiments show that, on average, SynViTA improves over existing methods on VideoCon test sets and SSv2-Temporal, SSv2-Events, and ATP-Hard benchmarks, being a first promising step for using synthetic videos when learning video-language models.

@article{zanella2025synvita, title = {Can Text-to-Video Generation help Video-Language Alignment?}, author = {Zanella, Luca and Mancini, Massimiliano and Menapace, Willi and Tulyakov, Sergey and Wang, Yiming and Ricci, Elisa}, journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2025}, } -

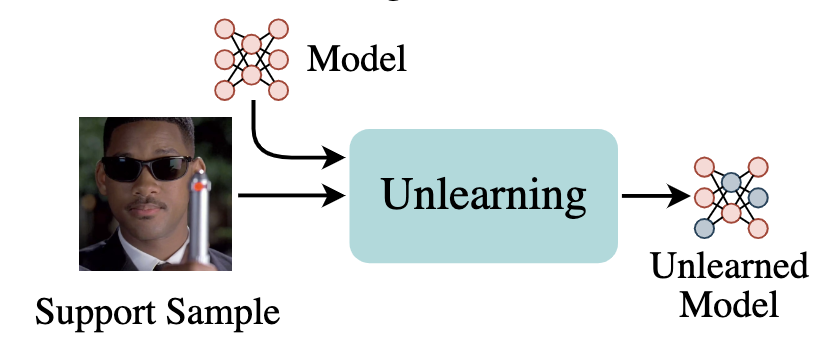

Unlearning Personal Data from a Single ImageThomas De Min , Massimiliano Mancini, Stéphane Lathuilière , and 2 more authorsIn Transactions on Machine Learning Research , 2025

Unlearning Personal Data from a Single ImageThomas De Min , Massimiliano Mancini, Stéphane Lathuilière , and 2 more authorsIn Transactions on Machine Learning Research , 2025Machine unlearning aims to erase data from a model as if the latter never saw them during training. While existing approaches unlearn information from complete or partial access to the training data, this access can be limited over time due to privacy regulations. Currently, no setting or benchmark exists to probe the effectiveness of unlearning methods in such scenarios. To fill this gap, we propose a novel task we call One-Shot Unlearning of Personal Identities (1-SHUI) that evaluates unlearning models when the training data is not available. We focus on unlearning identity data, which is specifically relevant due to current regulations requiring personal data deletion after training. To cope with data absence, we expect users to provide a portraiting picture to aid unlearning. We design requests on CelebA, CelebA-HQ, and MUFAC with different unlearning set sizes to evaluate applicable methods in 1-SHUI. Moreover, we propose MetaUnlearn, an effective method that meta-learns to forget identities from a single image. Our findings indicate that existing approaches struggle when data availability is limited, especially when there is a dissimilarity between the provided samples and the training data.

@inproceedings{demin2025unlearning, title = {Unlearning Personal Data from a Single Image}, author = {De Min, Thomas and Mancini, Massimiliano and Lathuilière, Stéphane and Roy, Subhankar and Ricci, Elisa}, booktitle = {Transactions on Machine Learning Research}, year = {2025}, } -

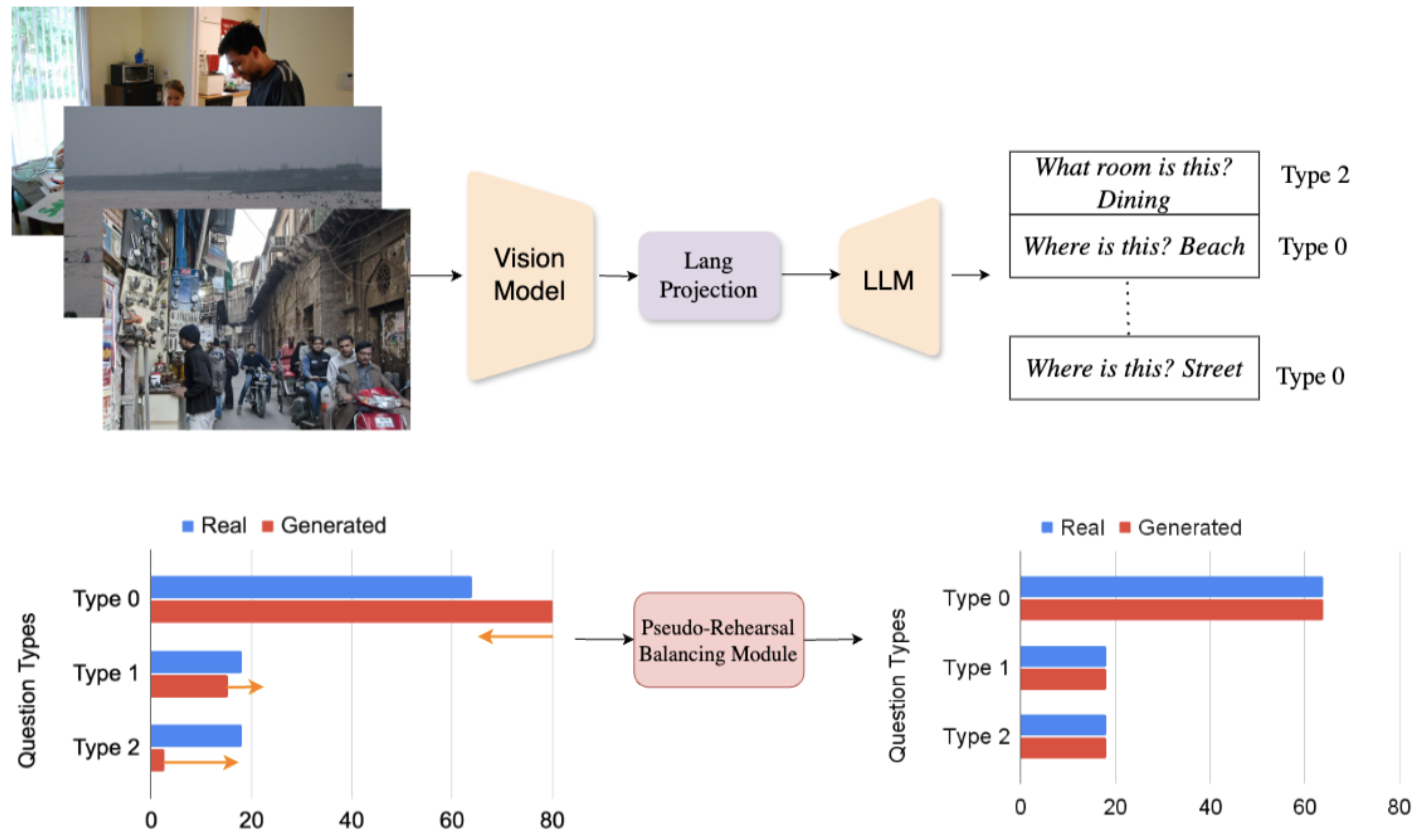

One VLM to Keep it Learning: Generation and Balancing for Data-free Continual Visual Question AnsweringDeepayan Das , Davide Talon , Massimiliano Mancini, and 2 more authorsIn IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) , 2025

One VLM to Keep it Learning: Generation and Balancing for Data-free Continual Visual Question AnsweringDeepayan Das , Davide Talon , Massimiliano Mancini, and 2 more authorsIn IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) , 2025Vision-Language Models (VLMs) have shown significant promise in Visual Question Answering (VQA) tasks by leveraging web-scale multimodal datasets. However, these models often struggle with continual learning due to catastrophic forgetting when adapting to new tasks. As an effective remedy to mitigate catastrophic forgetting, rehearsal strategy uses the data of past tasks upon learning new task. However, such strategy incurs the need of storing past data, which might not be feasible due to hardware constraints or privacy concerns. In this work, we propose the first data-free method that leverages the language generation capability of a VLM, instead of relying on external models, to produce pseudo-rehearsal data for addressing continual VQA. Our proposal, named as GaB, generates pseudo-rehearsal data by posing previous task questions on new task data. Yet, despite being effective, the distribution of generated questions skews towards the most frequently posed questions due to the limited and task-specific training data. To mitigate this issue, we introduce a pseudo-rehearsal balancing module that aligns the generated data towards the ground-truth data distribution using either the question meta-statistics or an unsupervised clustering method. We evaluate our proposed method on two recent benchmarks, i.e., VQACL-VQAv2 and CLOVE-function benchmarks. GaB outperforms all the data-free baselines with substantial improvement in maintaining VQA performance across evolving tasks, while being on-par with methods with access to the past data.

@inproceedings{das2025onevlm, title = {One VLM to Keep it Learning: Generation and Balancing for Data-free Continual Visual Question Answering}, author = {Das, Deepayan and Talon, Davide and Mancini, Massimiliano and Wang, Yiming and Ricci, Elisa}, booktitle = {IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}, year = {2025}, } -

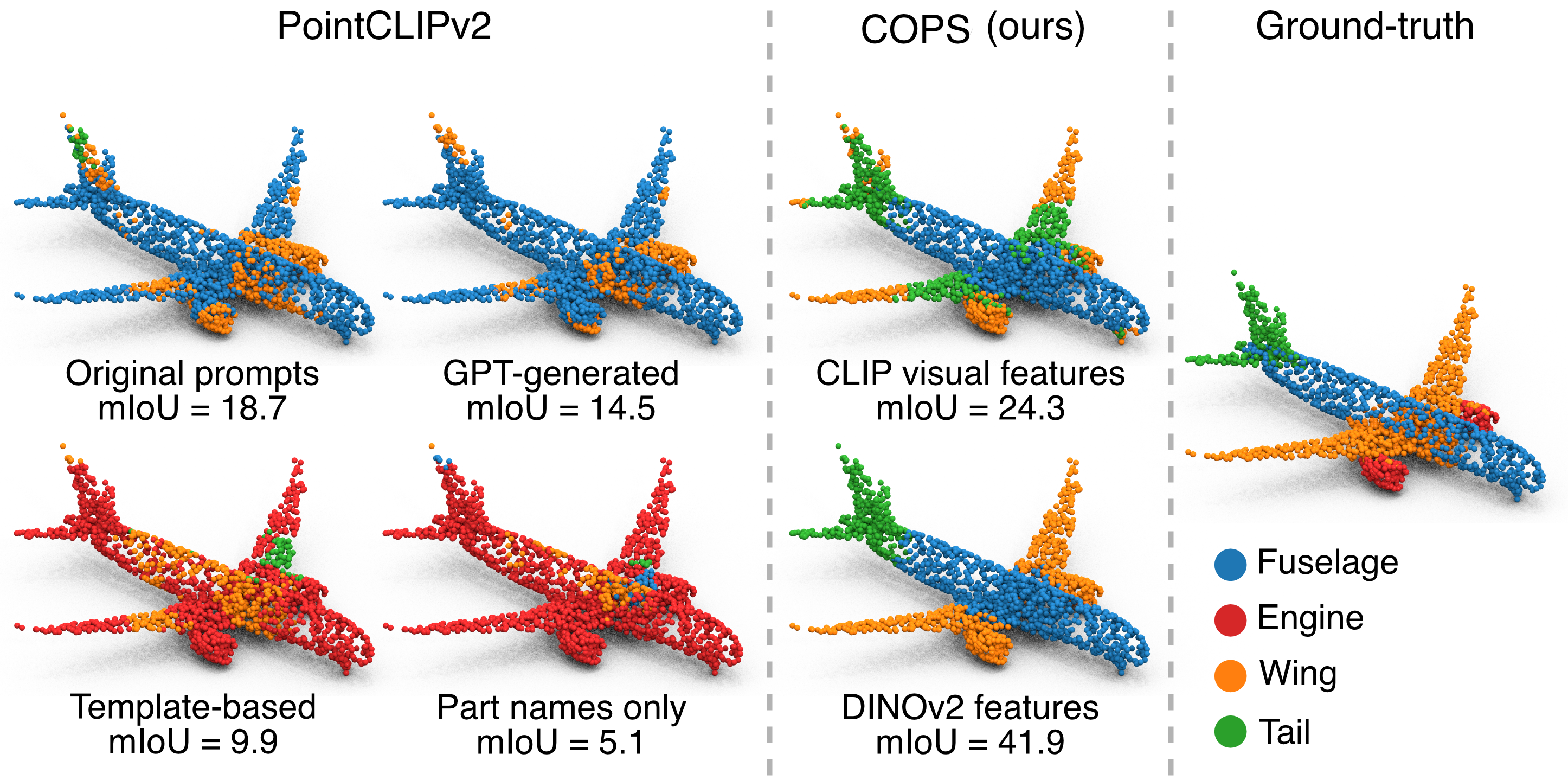

3D Part Segmentation via Geometric Aggregation of 2D Visual FeaturesMarco Garosi , Riccardo Tedoldi , Davide Boscaini , and 3 more authorsIn IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) , 2025

3D Part Segmentation via Geometric Aggregation of 2D Visual FeaturesMarco Garosi , Riccardo Tedoldi , Davide Boscaini , and 3 more authorsIn IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) , 2025Supervised 3D part segmentation models are tailored for a fixed set of objects and parts, limiting their transferability to open-set, real-world scenarios. Recent works have explored vision-language models (VLMs) as a promising alternative, using multi-view rendering and textual prompting to identify object parts. However, naively applying VLMs in this context introduces several drawbacks, such as the need for meticulous prompt engineering, and fails to leverage the 3D geometric structure of objects. To address these limitations, we propose COPS, a COmprehensive model for Parts Segmentation that blends the semantics extracted from visual concepts and 3D geometry to effectively identify object parts. COPS renders a point cloud from multiple viewpoints, extracts 2D features, projects them back to 3D, and uses a novel geometric-aware feature aggregation procedure to ensure spatial and semantic consistency. Finally, it clusters points into parts and labels them. We demonstrate that COPS is efficient, scalable, and achieves zero-shot state-of-the-art performance across five datasets, covering synthetic and real-world data, texture-less and coloured objects, as well as rigid and non-rigid shapes.

@inproceedings{garosi20253d, title = {3D Part Segmentation via Geometric Aggregation of 2D Visual Features}, author = {Garosi, Marco and Tedoldi, Riccardo and Boscaini, Davide and Mancini, Massimiliano and Sebe, Nicu and Poiesi, Fabio}, booktitle = {IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}, year = {2025}, }

2024

-

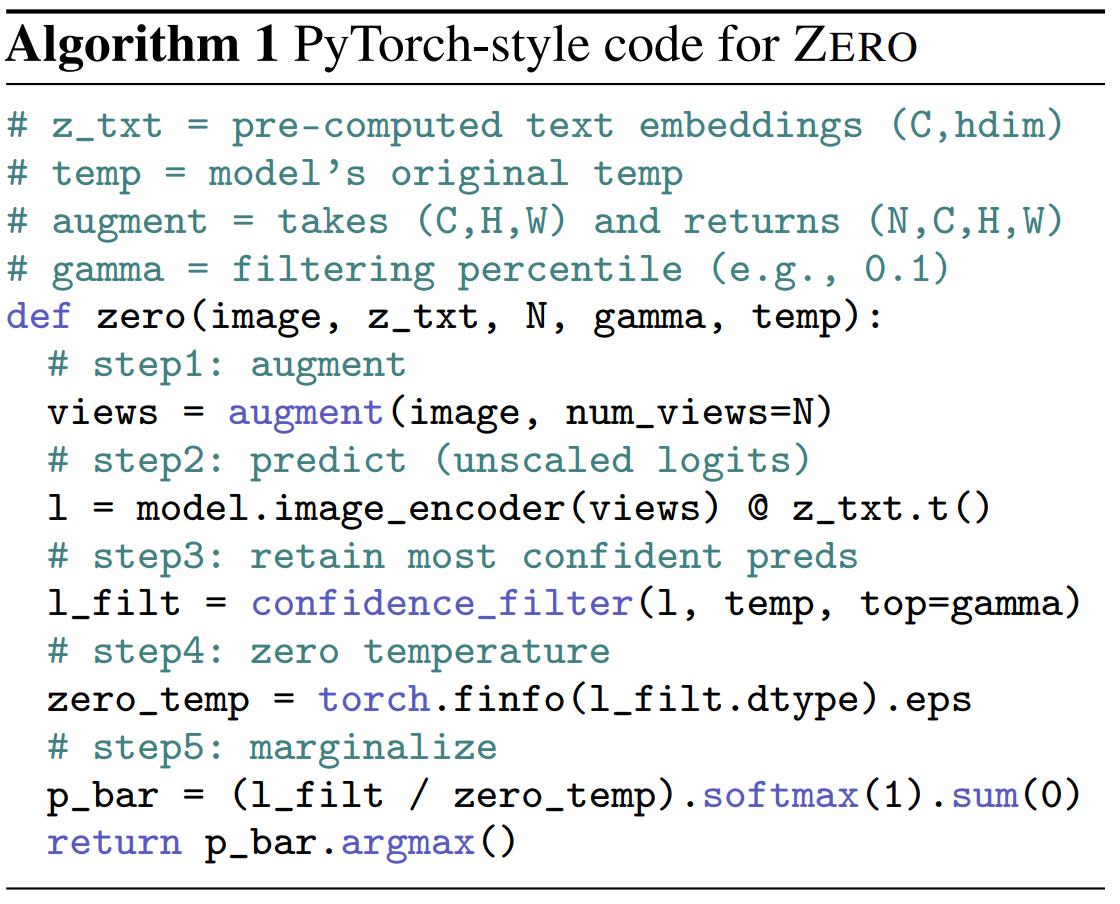

Frustratingly Easy Test-Time Adaptation of Vision-Language ModelsMatteo Farina , Gianni Franchi , Giovanni Iacca , and 2 more authorsAdvances in Neural Information Processing Systems (NeurIPS), Dec 2024

Frustratingly Easy Test-Time Adaptation of Vision-Language ModelsMatteo Farina , Gianni Franchi , Giovanni Iacca , and 2 more authorsAdvances in Neural Information Processing Systems (NeurIPS), Dec 2024Vision-Language Models seamlessly discriminate among arbitrary semantic categories, yet they still suffer from poor generalization when presented with challenging examples. For this reason, Episodic Test-Time Adaptation (TTA) strategies have recently emerged as powerful techniques to adapt VLMs in the presence of a single unlabeled image. The recent literature on TTA is dominated by the paradigm of prompt tuning by Marginal Entropy Minimization, which, relying on online backpropagation, inevitably slows down inference while increasing memory. In this work, we theoretically investigate the properties of this approach and unveil that a surprisingly strong TTA method lies dormant and hidden within it. We term this approach ZERO (TTA with “zero” temperature), whose design is both incredibly effective and frustratingly simple: augment N times, predict, retain the most confident predictions, and marginalize after setting the Softmax temperature to zero. Remarkably, ZERO requires a single batched forward pass through the vision encoder only and no backward passes. We thoroughly evaluate our approach following the experimental protocol established in the literature and show that ZERO largely surpasses or compares favorably w.r.t. the state-of-the-art while being almost 10× faster and 13× more memory friendly than standard Test-Time Prompt Tuning. Thanks to its simplicity and comparatively negligible computation, ZERO can serve as a strong baseline for future work in this field. Code will be available.

@article{farina2024frustratingly, title = {Frustratingly Easy Test-Time Adaptation of Vision-Language Models}, author = {Farina, Matteo and Franchi, Gianni and Iacca, Giovanni and Mancini, Massimiliano and Ricci, Elisa}, journal = {Advances in Neural Information Processing Systems (NeurIPS)}, year = {2024}, month = dec, } -

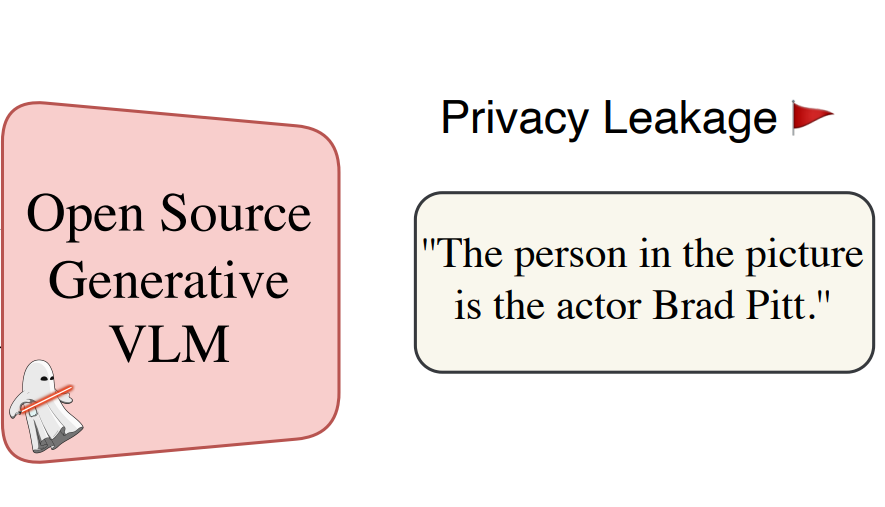

The Phantom Menace: Unmasking Privacy Leakages in Vision-Language ModelsSimone Caldarella , Massimiliano Mancini, Elisa Ricci , and 1 more authorIn European Conference on Computer Vision (ECCV) Workshops , Oct 2024

The Phantom Menace: Unmasking Privacy Leakages in Vision-Language ModelsSimone Caldarella , Massimiliano Mancini, Elisa Ricci , and 1 more authorIn European Conference on Computer Vision (ECCV) Workshops , Oct 2024Vision-Language Models (VLMs) combine visual and textual understanding, rendering them well-suited for diverse tasks like generating image captions and answering visual questions across various domains. However, these capabilities are built upon training on large amount of uncurated data crawled from the web. The latter may include sensitive information that VLMs could memorize and leak, raising significant privacy concerns. In this paper, we assess whether these vulnerabilities exist, focusing on identity leakage. Our study leads to three key findings: (i) VLMs leak identity information, even when the vision-language alignment and the fine-tuning use anonymized data; (ii) context has little influence on identity leakage; (iii) simple, widely used anonymization techniques, like blurring, are not sufficient to address the problem. These findings underscore the urgent need for robust privacy protection strategies when deploying VLMs. Ethical awareness and responsible development practices are essential to mitigate these risks.

@inproceedings{caldarella2024phantom, title = {The Phantom Menace: Unmasking Privacy Leakages in Vision-Language Models}, author = {Caldarella, Simone and Mancini, Massimiliano and Ricci, Elisa and Aljundi, Rahaf}, booktitle = {European Conference on Computer Vision (ECCV) Workshops}, year = {2024}, month = oct, } -

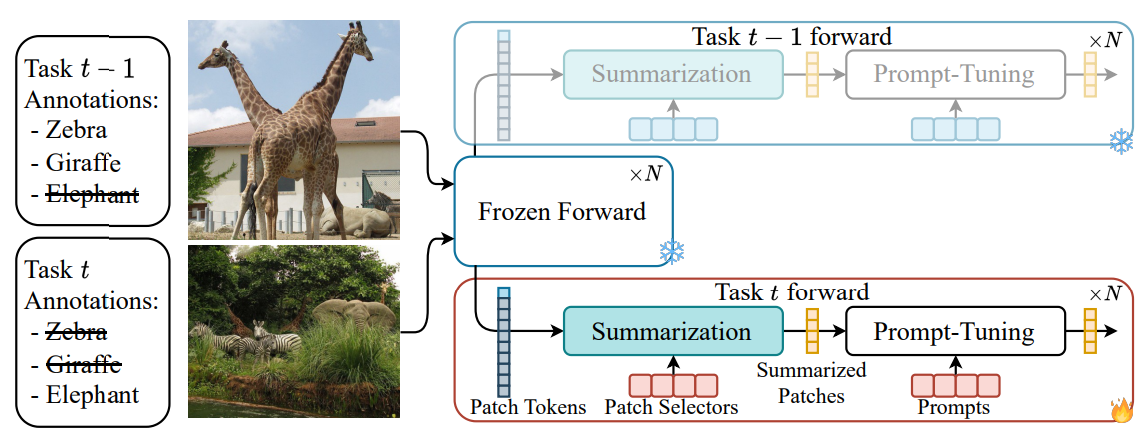

Less is more: Summarizing Patch Tokens for efficient Multi-Label Class-Incremental LearningThomas De Min , Massimiliano Mancini, Stéphane Lathuilière , and 2 more authorsIn Conference on Lifelong Learning Agents , Oct 2024

Less is more: Summarizing Patch Tokens for efficient Multi-Label Class-Incremental LearningThomas De Min , Massimiliano Mancini, Stéphane Lathuilière , and 2 more authorsIn Conference on Lifelong Learning Agents , Oct 2024Prompt tuning has emerged as an effective rehearsal-free technique for class-incremental learning (CIL) that learns a tiny set of task-specific parameters (or prompts) to instruct a pre-trained transformer to learn on a sequence of tasks. Albeit effective, prompt tuning methods do not lend well in the multi-label class incremental learning (MLCIL) scenario (where an image contains multiple foreground classes) due to the ambiguity in selecting the correct prompt(s) corresponding to different foreground objects belonging to multiple tasks. To circumvent this issue we propose to eliminate the prompt selection mechanism by maintaining task-specific pathways, which allow us to learn representations that do not interact with the ones from the other tasks. Since independent pathways in truly incremental scenarios will result in an explosion of computation due to the quadratically complex multi-head self-attention (MSA) operation in prompt tuning, we propose to reduce the original patch token embeddings into summarized tokens. Prompt tuning is then applied to these fewer summarized tokens to compute the final representation. Our proposed method Multi-Label class incremental learning via summarising pAtch tokeN Embeddings (MULTI-LANE) enables learning disentangled task-specific representations in MLCIL while ensuring fast inference. We conduct experiments in common benchmarks and demonstrate that our MULTI-LANE achieves a new state-of-the-art in MLCIL. Additionally, we show that MULTI-LANE is also competitive in the CIL setting.

@inproceedings{demin2024multilane, title = {Less is more: Summarizing Patch Tokens for efficient Multi-Label Class-Incremental Learning}, author = {De Min, Thomas and Mancini, Massimiliano and Lathuilière, Stéphane and Roy, Subhankar and Ricci, Elisa}, booktitle = {Conference on Lifelong Learning Agents}, year = {2024}, } -

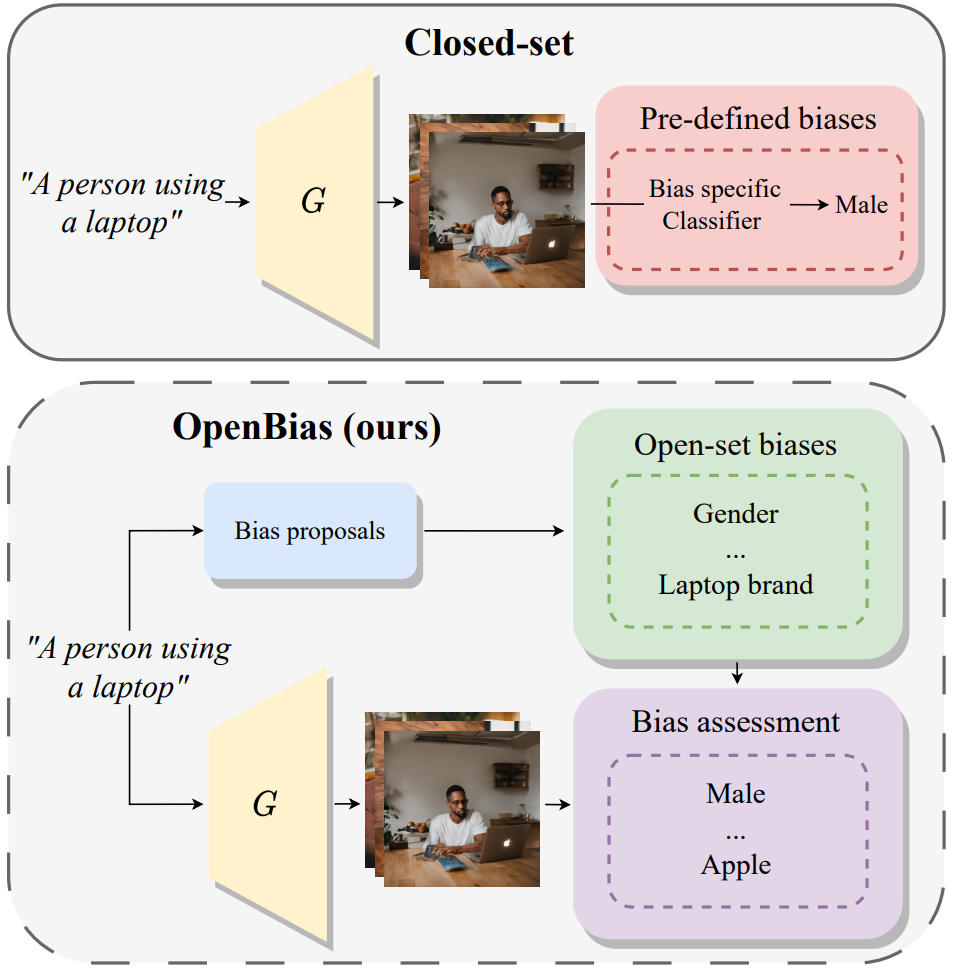

OpenBias: Open-set Bias Detection in Text-to-Image Generative ModelsMoreno D’Incà , Elia Peruzzo , Massimiliano Mancini, and 6 more authorsIEEE/CVF Conference on Computer Vision and Pattern Recognition, Oct 2024

OpenBias: Open-set Bias Detection in Text-to-Image Generative ModelsMoreno D’Incà , Elia Peruzzo , Massimiliano Mancini, and 6 more authorsIEEE/CVF Conference on Computer Vision and Pattern Recognition, Oct 2024Text-to-image generative models are becoming increasingly popular and accessible to the general public. As these models see large-scale deployments it is necessary to deeply investigate their safety and fairness to not disseminate and perpetuate any kind of biases. However existing works focus on detecting closed sets of biases defined a priori limiting the studies to well-known concepts. In this paper we tackle the challenge of open-set bias detection in text-to-image generative models presenting OpenBias a new pipeline that identifies and quantifies the severity of biases agnostically without access to any precompiled set. OpenBias has three stages. In the first phase we leverage a Large Language Model (LLM) to propose biases given a set of captions. Secondly the target generative model produces images using the same set of captions. Lastly a Vision Question Answering model recognizes the presence and extent of the previously proposed biases. We study the behavior of Stable Diffusion 1.5 2 and XL emphasizing new biases never investigated before. Via quantitative experiments we demonstrate that OpenBias agrees with current closed-set bias detection methods and human judgement.

@article{dinca2024openbias, title = {OpenBias: Open-set Bias Detection in Text-to-Image Generative Models}, author = {D'Incà, Moreno and Peruzzo, Elia and Mancini, Massimiliano and Xu, Dejia and Goel, Vidit and Xu, Xingqian and Wang, Zhangyang and Shi, Humphrey and Sebe, Nicu}, journal = {IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2024}, } -

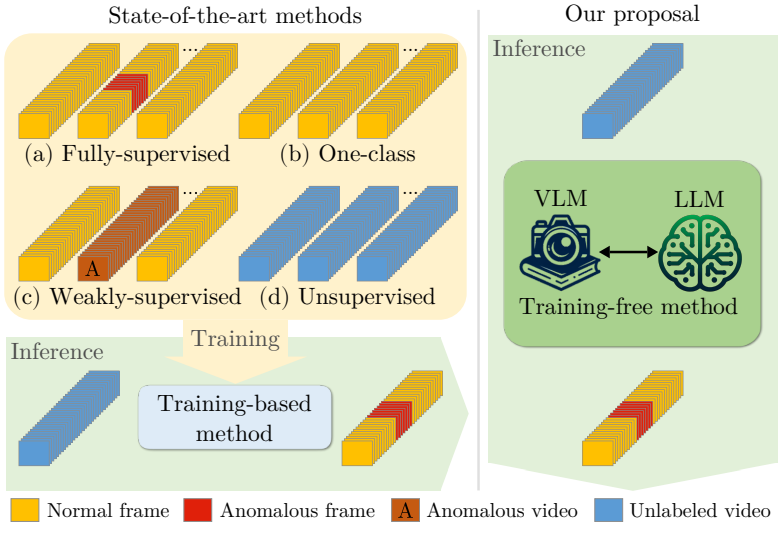

Harnessing Large Language Models for Training-free Video Anomaly DetectionLuca Zanella , Willi Menapace , Massimiliano Mancini, and 2 more authorsIEEE/CVF Conference on Computer Vision and Pattern Recognition, Oct 2024

Harnessing Large Language Models for Training-free Video Anomaly DetectionLuca Zanella , Willi Menapace , Massimiliano Mancini, and 2 more authorsIEEE/CVF Conference on Computer Vision and Pattern Recognition, Oct 2024Video anomaly detection (VAD) aims to temporally locate abnormal events in a video. Existing works mostly rely on training deep models to learn the distribution of normality with either video-level supervision one-class supervision or in an unsupervised setting. Training-based methods are prone to be domain-specific thus being costly for practical deployment as any domain change will involve data collection and model training. In this paper we radically depart from previous efforts and propose LAnguage-based VAD (LAVAD) a method tackling VAD in a novel training-free paradigm exploiting the capabilities of pre-trained large language models (LLMs) and existing vision-language models (VLMs). We leverage VLM-based captioning models to generate textual descriptions for each frame of any test video. With the textual scene description we then devise a prompting mechanism to unlock the capability of LLMs in terms of temporal aggregation and anomaly score estimation turning LLMs into an effective video anomaly detector. We further leverage modality-aligned VLMs and propose effective techniques based on cross-modal similarity for cleaning noisy captions and refining the LLM-based anomaly scores. We evaluate LAVAD on two large datasets featuring real-world surveillance scenarios (UCF-Crime and XD-Violence) showing that it outperforms both unsupervised and one-class methods without requiring any training or data collection.

@article{zanella2024lavad, title = {Harnessing Large Language Models for Training-free Video Anomaly Detection}, author = {Zanella, Luca and Menapace, Willi and Mancini, Massimiliano and Wang, Yiming and Ricci, Elisa}, journal = {IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2024}, } -

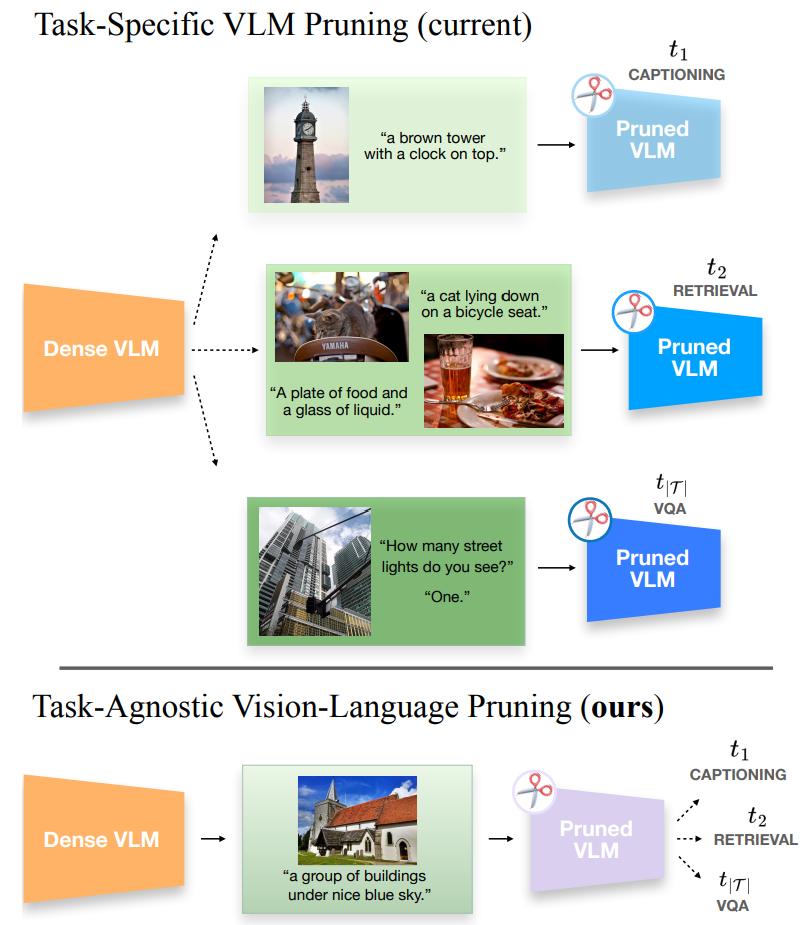

MULTIFLOW: Shifting Towards Task-Agnostic Vision-Language PruningMatteo Farina , Massimiliano Mancini, Elia Cunegatti , and 3 more authorsIEEE/CVF Conference on Computer Vision and Pattern Recognition, Oct 2024

MULTIFLOW: Shifting Towards Task-Agnostic Vision-Language PruningMatteo Farina , Massimiliano Mancini, Elia Cunegatti , and 3 more authorsIEEE/CVF Conference on Computer Vision and Pattern Recognition, Oct 2024While excellent in transfer learning Vision-Language models (VLMs) come with high computational costs due to their large number of parameters. To address this issue removing parameters via model pruning is a viable solution. However existing techniques for VLMs are task-specific and thus require pruning the network from scratch for each new task of interest. In this work we explore a new direction: Task-Agnostic Vision-Language Pruning (TA-VLP). Given a pretrained VLM the goal is to find a unique pruned counterpart transferable to multiple unknown downstream tasks. In this challenging setting the transferable representations already encoded in the pretrained model are a key aspect to preserve. Thus we propose Multimodal Flow Pruning (MULTIFLOW) a first gradient-free pruning framework for TA-VLP where: (i) the importance of a parameter is expressed in terms of its magnitude and its information flow by incorporating the saliency of the neurons it connects; and (ii) pruning is driven by the emergent (multimodal) distribution of the VLM parameters after pretraining. We benchmark eight state-of-the-art pruning algorithms in the context of TA-VLP experimenting with two VLMs three vision-language tasks and three pruning ratios. Our experimental results show that MULTIFLOW outperforms recent sophisticated combinatorial competitors in the vast majority of the cases paving the way towards addressing TA-VLP. The code is publicly available at https://github.com/FarinaMatteo/multiflow.

@article{farina2024multiflow, title = {MULTIFLOW: Shifting Towards Task-Agnostic Vision-Language Pruning}, author = {Farina, Matteo and Mancini, Massimiliano and Cunegatti, Elia and Liu, Gaowen and Iacca, Giovanni and Ricci, Elisa}, journal = {IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2024}, } -

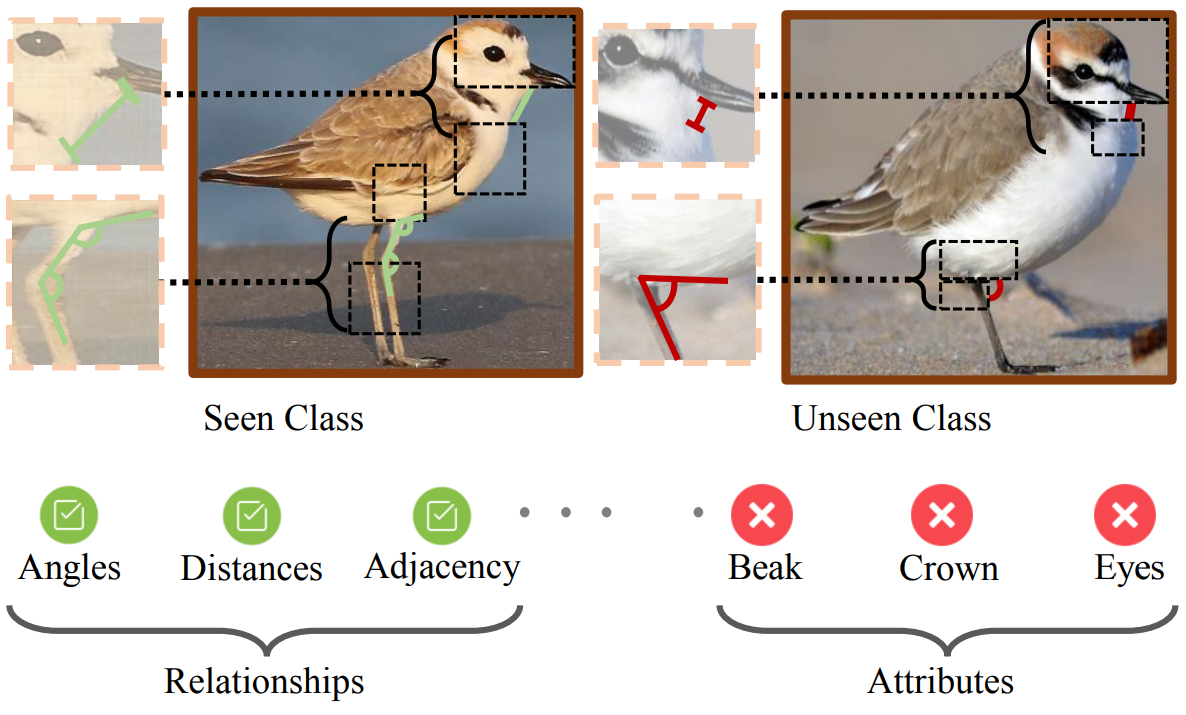

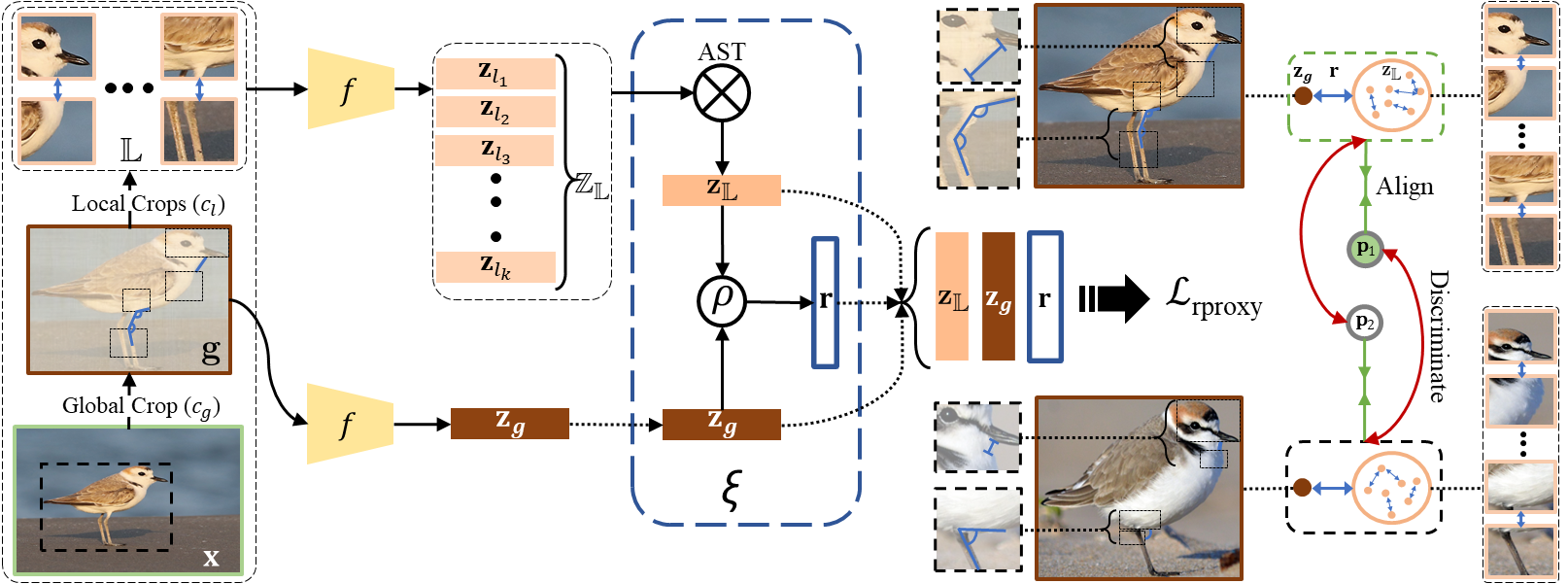

Relational Proxies: Fine-Grained Relationships as Zero-Shot DiscriminatorsAbhra Chaudhuri , Massimiliano Mancini, Zeynep Akata , and 1 more authorIEEE Transactions on Pattern Analysis and Machine Intelligence, Oct 2024

Relational Proxies: Fine-Grained Relationships as Zero-Shot DiscriminatorsAbhra Chaudhuri , Massimiliano Mancini, Zeynep Akata , and 1 more authorIEEE Transactions on Pattern Analysis and Machine Intelligence, Oct 2024Visual categories that largely share the same set of local parts cannot be discriminated based on part information alone, as they mostly differ in the way the local parts relate to the overall global structure of the object. We propose Relational Proxies , a novel approach that leverages the relational information between the global and local views of an object for encoding its semantic label, even for categories it has not encountered during training. Starting with a rigorous formalization of the notion of distinguishability between categories that share attributes, we prove the necessary and sufficient conditions that a model must satisfy in order to learn the underlying decision boundaries to tell them apart. We design Relational Proxies based on our theoretical findings and evaluate it on seven challenging fine-grained benchmark datasets and achieve state-of-the-art results on all of them, surpassing the performance of all existing works with a margin exceeding 4% in some cases. We additionally show that Relational Proxies also generalizes to the zero-shot setting, where it can efficiently leverage emergent relationships among attributes and image views to generalize to unseen categories, surpassing current state-of-the-art in both the non-generative and generative settings.

@article{chaudhuri2024relational, title = {Relational Proxies: Fine-Grained Relationships as Zero-Shot Discriminators}, author = {Chaudhuri, Abhra and Mancini, Massimiliano and Akata, Zeynep and Dutta, Anjan}, journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence}, year = {2024}, volume = {46}, number = {12}, pages = {8653-8664}, doi = {10.1109/TPAMI.2024.3408913}, } -

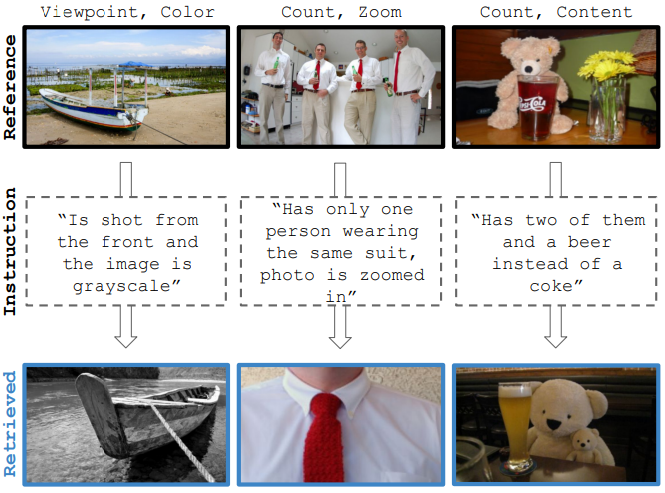

Vision-by-Language for Training-Free Compositional Image RetrievalShyamgopal Karthik , Karsten Roth , Massimiliano Mancini, and 1 more authorIn International Conference on Learning Representations (ICLR) , Oct 2024

Vision-by-Language for Training-Free Compositional Image RetrievalShyamgopal Karthik , Karsten Roth , Massimiliano Mancini, and 1 more authorIn International Conference on Learning Representations (ICLR) , Oct 2024Given an image and a target modification (e.g an image of the Eiffel tower and the text “without people and at night-time”), Compositional Image Retrieval (CIR) aims to retrieve the relevant target image in a database. While supervised approaches rely on annotating triplets that is costly (i.e. query image, textual modification, and target image), recent research sidesteps this need by using large-scale vision-language models (VLMs), performing Zero-Shot CIR (ZS-CIR). However, state-of-the-art approaches in ZS-CIR still require training task-specific, customized models over large amounts of image-text pairs. In this work, we proposeto tackle CIR in a training-free manner via our Compositional Image Retrieval through Vision-by-Language (CIReVL), a simple, yet human-understandable and scalable pipeline that effectively recombines large-scale VLMs with large language models (LLMs). By captioning the reference image using a pre-trained generative VLM and asking a LLM to recompose the caption based on the textual target modification for subsequent retrieval via e.g. CLIP, we achieve modular language reasoning. In four ZS-CIR benchmarks, we find competitive, in-part state-of-the-art performance - improving over supervised methods Moreover, the modularity of CIReVL offers simple scalability without re-training, allowing us to both investigate scaling laws and bottlenecks for ZS-CIR while easily scaling up to in parts more than double of previously reported results. Finally, we show that CIReVL makes CIR human-understandable by composing image and text in a modular fashion in the language domain, thereby making it intervenable, allowing to post-hoc re-align failure cases. Code will be released upon acceptance.

@inproceedings{Karthik_2024_ICLR, author = {Karthik, Shyamgopal and Roth, Karsten and Mancini, Massimiliano and Akata, Zeynep}, title = {Vision-by-Language for Training-Free Compositional Image Retrieval}, booktitle = {International Conference on Learning Representations (ICLR)}, year = {2024}, } -

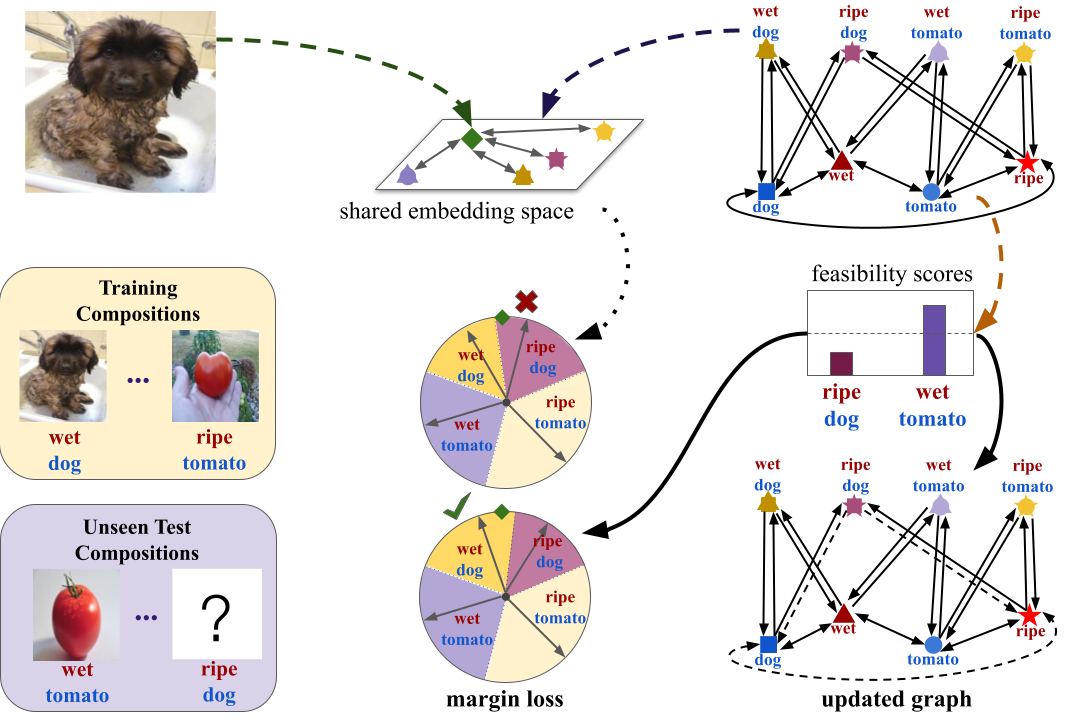

Learning Graph Embeddings for Open World Compositional Zero-Shot LearningMassimiliano Mancini, Muhammad Ferjad Naeem , Yongqin Xian , and 1 more authorIEEE Transactions on Pattern Analysis and Machine Intelligence, Oct 2024

Learning Graph Embeddings for Open World Compositional Zero-Shot LearningMassimiliano Mancini, Muhammad Ferjad Naeem , Yongqin Xian , and 1 more authorIEEE Transactions on Pattern Analysis and Machine Intelligence, Oct 2024Compositional Zero-Shot learning (CZSL) aims to recognize unseen compositions of state and object visual primitives seen during training. A problem with standard CZSL is the assumption of knowing which unseen compositions will be available at test time. In this work, we overcome this assumption operating on the open world setting, where no limit is imposed on the compositional space at test time, and the search space contains a large number of unseen compositions. To address this problem, we propose a new approach, Compositional Cosine Graph Embeddings (Co-CGE), based on two principles. First, Co-CGE models the dependency between states, objects and their compositions through a graph convolutional neural network. The graph propagates information from seen to unseen concepts, improving their representations. Second, since not all unseen compositions are equally feasible, and less feasible ones may damage the learned representations, Co-CGE estimates a feasibility score for each unseen composition, using the scores as margins in a cosine similarity-based loss and as weights in the adjacency matrix of the graphs. Experiments show that our approach achieves state-of-the-art performances in standard CZSL while outperforming previous methods in the open world scenario.

@article{9745371, author = {Mancini, Massimiliano and Naeem, Muhammad Ferjad and Xian, Yongqin and Akata, Zeynep}, journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence}, title = {Learning Graph Embeddings for Open World Compositional Zero-Shot Learning}, year = {2024}, volume = {46}, number = {3}, pages = {1545-1560}, doi = {10.1109/TPAMI.2022.3163667}, } -

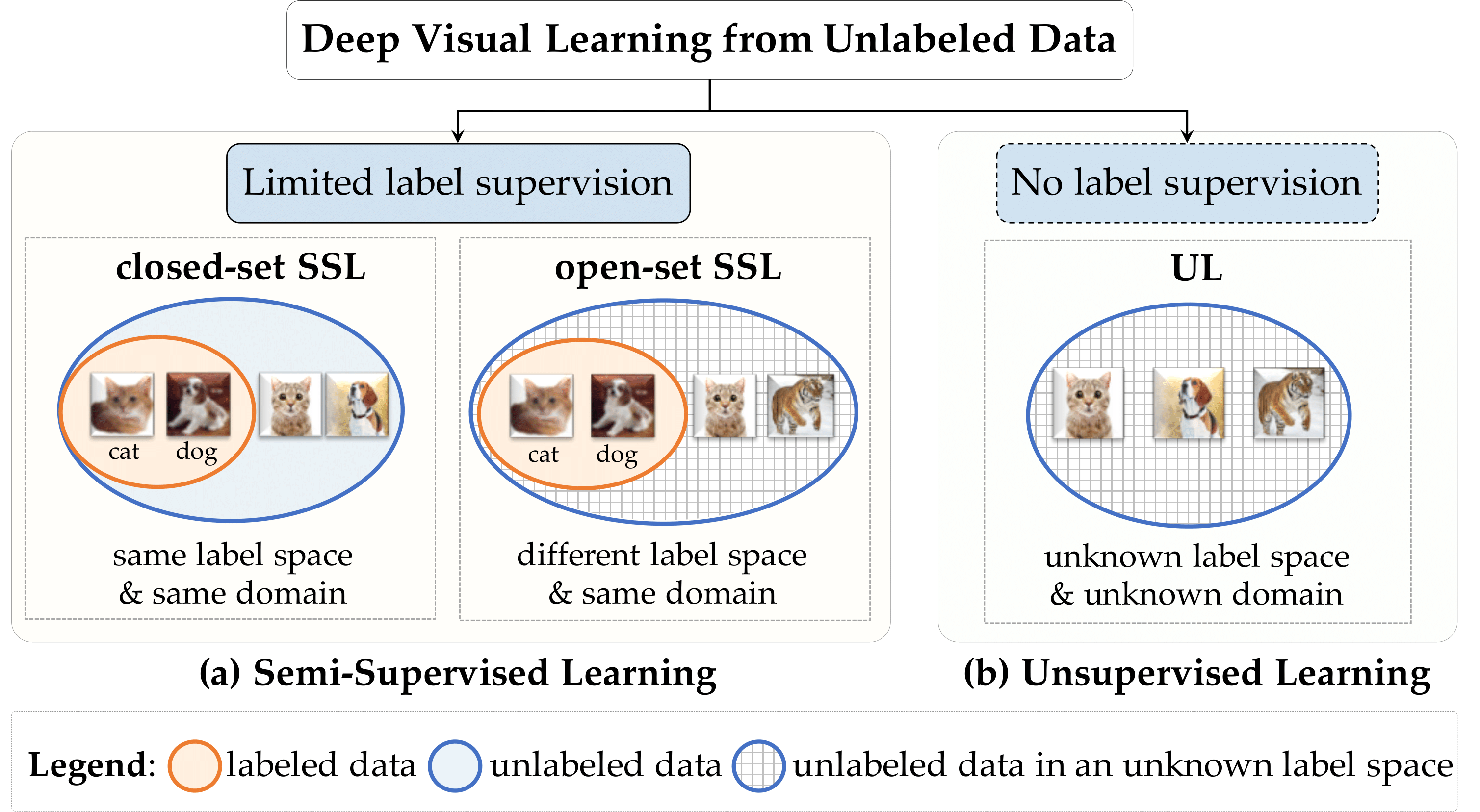

Semi-Supervised and Unsupervised Deep Visual Learning: A SurveyYanbei Chen , Massimiliano Mancini, Xiatian Zhu , and 1 more authorIEEE Transactions on Pattern Analysis and Machine Intelligence, Oct 2024

Semi-Supervised and Unsupervised Deep Visual Learning: A SurveyYanbei Chen , Massimiliano Mancini, Xiatian Zhu , and 1 more authorIEEE Transactions on Pattern Analysis and Machine Intelligence, Oct 2024State-of-the-art deep learning models are often trained with a large amount of costly labeled training data. However, requiring exhaustive manual annotations may degrade the model’s generalizability in the limited-label regime.Semi-supervised learning and unsupervised learning offer promising paradigms to learn from an abundance of unlabeled visual data. Recent progress in these paradigms has indicated the strong benefits of leveraging unlabeled data to improve model generalization and provide better model initialization. In this survey, we review the recent advanced deep learning algorithms on semi-supervised learning (SSL) and unsupervised learning (UL) for visual recognition from a unified perspective. To offer a holistic understanding of the state-of-the-art in these areas, we propose a unified taxonomy. We categorize existing representative SSL and UL with comprehensive and insightful analysis to highlight their design rationales in different learning scenarios and applications in different computer vision tasks. Lastly, we discuss the emerging trends and open challenges in SSL and UL to shed light on future critical research directions.

@article{chen2022semi, title = {Semi-Supervised and Unsupervised Deep Visual Learning: A Survey}, author = {Chen, Yanbei and Mancini, Massimiliano and Zhu, Xiatian and Akata, Zeynep}, journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence}, year = {2024}, volume = {46}, number = {3}, pages = {61327-1347}, publisher = {IEEE}, }

2023

-

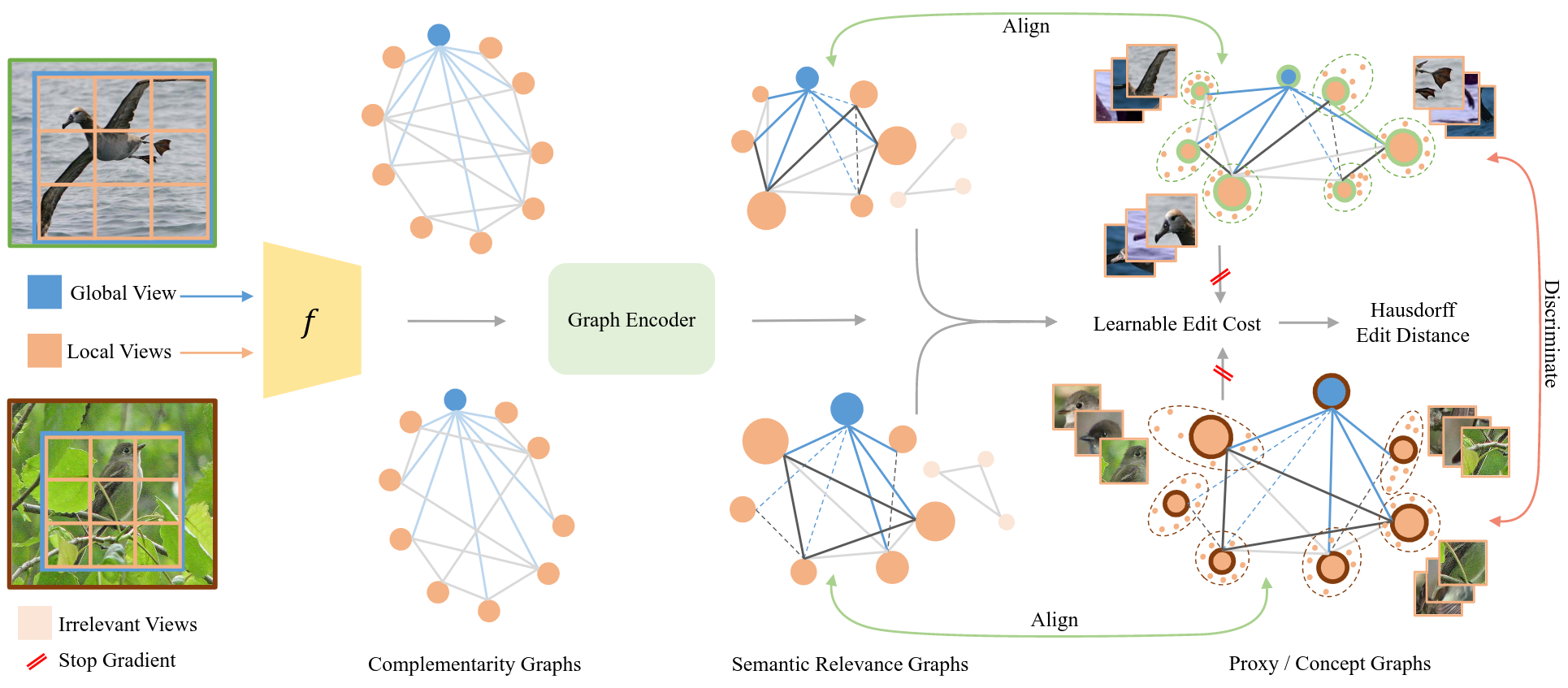

Transitivity Recovering Decompositions: Interpretable and Robust Fine-Grained RelationshipsAbhra Chaudhuri , Massimiliano Mancini, Zeynep Akata , and 1 more authorIn Thirty-seventh Conference on Neural Information Processing Systems , Oct 2023

Transitivity Recovering Decompositions: Interpretable and Robust Fine-Grained RelationshipsAbhra Chaudhuri , Massimiliano Mancini, Zeynep Akata , and 1 more authorIn Thirty-seventh Conference on Neural Information Processing Systems , Oct 2023Recent advances in fine-grained representation learning leverage local-to-global (emergent) relationships for achieving state-of-the-art results. The relational representations relied upon by such methods, however, are abstract. We aim to deconstruct this abstraction by expressing them as interpretable graphs over image views. We begin by theoretically showing that abstract relational representations are nothing but a way of recovering transitive relationships among local views. Based on this, we design Transitivity Recovering Decompositions (TRD), a graph-space search algorithm that identifies interpretable equivalents of abstract emergent relationships at both instance and class levels, and with no post-hoc computations. We additionally show that TRD is provably robust to noisy views, with empirical evidence also supporting this finding. The latter allows TRD to perform at par or even better than the state-of-the-art, while being fully interpretable.

@inproceedings{chaudhuri2023transitivity, title = {Transitivity Recovering Decompositions: Interpretable and Robust Fine-Grained Relationships}, author = {Chaudhuri, Abhra and Mancini, Massimiliano and Akata, Zeynep and Dutta, Anjan}, booktitle = {Thirty-seventh Conference on Neural Information Processing Systems}, year = {2023}, } -

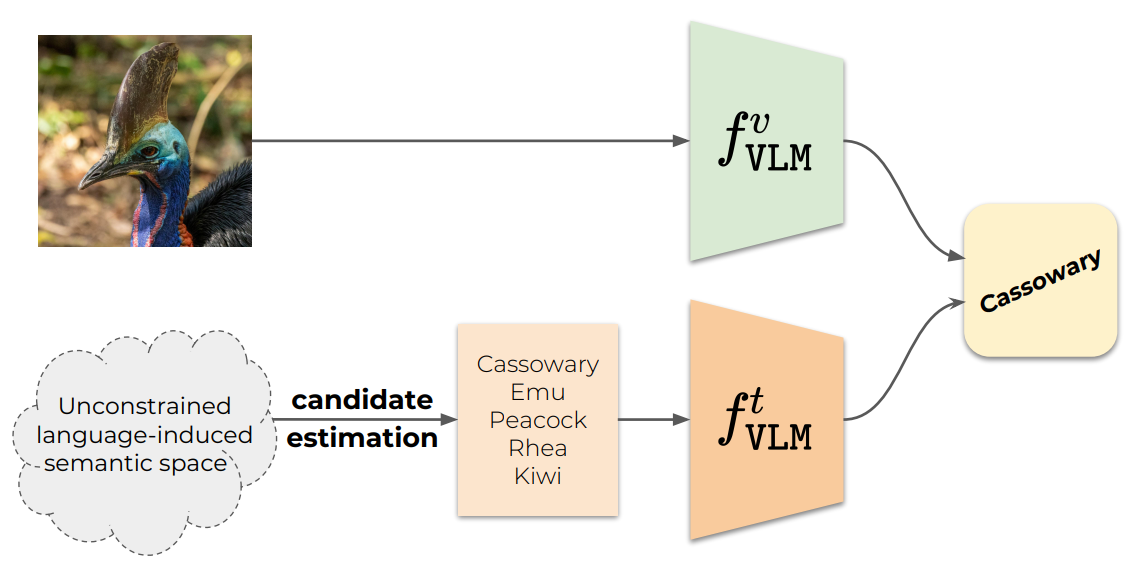

Vocabulary-free Image ClassificationAlessandro Conti , Enrico Fini , Massimiliano Mancini, and 3 more authorsAdvances in Neural Information Processing Systems (NeurIPS), Oct 2023

Vocabulary-free Image ClassificationAlessandro Conti , Enrico Fini , Massimiliano Mancini, and 3 more authorsAdvances in Neural Information Processing Systems (NeurIPS), Oct 2023Recent advances in large vision-language models have revolutionized the image classification paradigm. Despite showing impressive zero-shot capabilities, a pre-defined set of categories, a.k.a. the vocabulary, is assumed at test time for composing the textual prompts. However, such assumption can be impractical when the semantic context is unknown and evolving. We thus formalize a novel task, termed as Vocabulary-free Image Classification (VIC), where we aim to assign to an input image a class that resides in an unconstrained language-induced semantic space, without the prerequisite of a known vocabulary. VIC is a challenging task as the semantic space is extremely large, containing millions of concepts, with hard-to-discriminate fine-grained categories.

@article{conti2023vocabulary, title = {Vocabulary-free Image Classification}, author = {Conti, Alessandro and Fini, Enrico and Mancini, Massimiliano and Rota, Paolo and Wang, Yiming and Ricci, Elisa}, journal = {Advances in Neural Information Processing Systems (NeurIPS)}, year = {2023}, } -

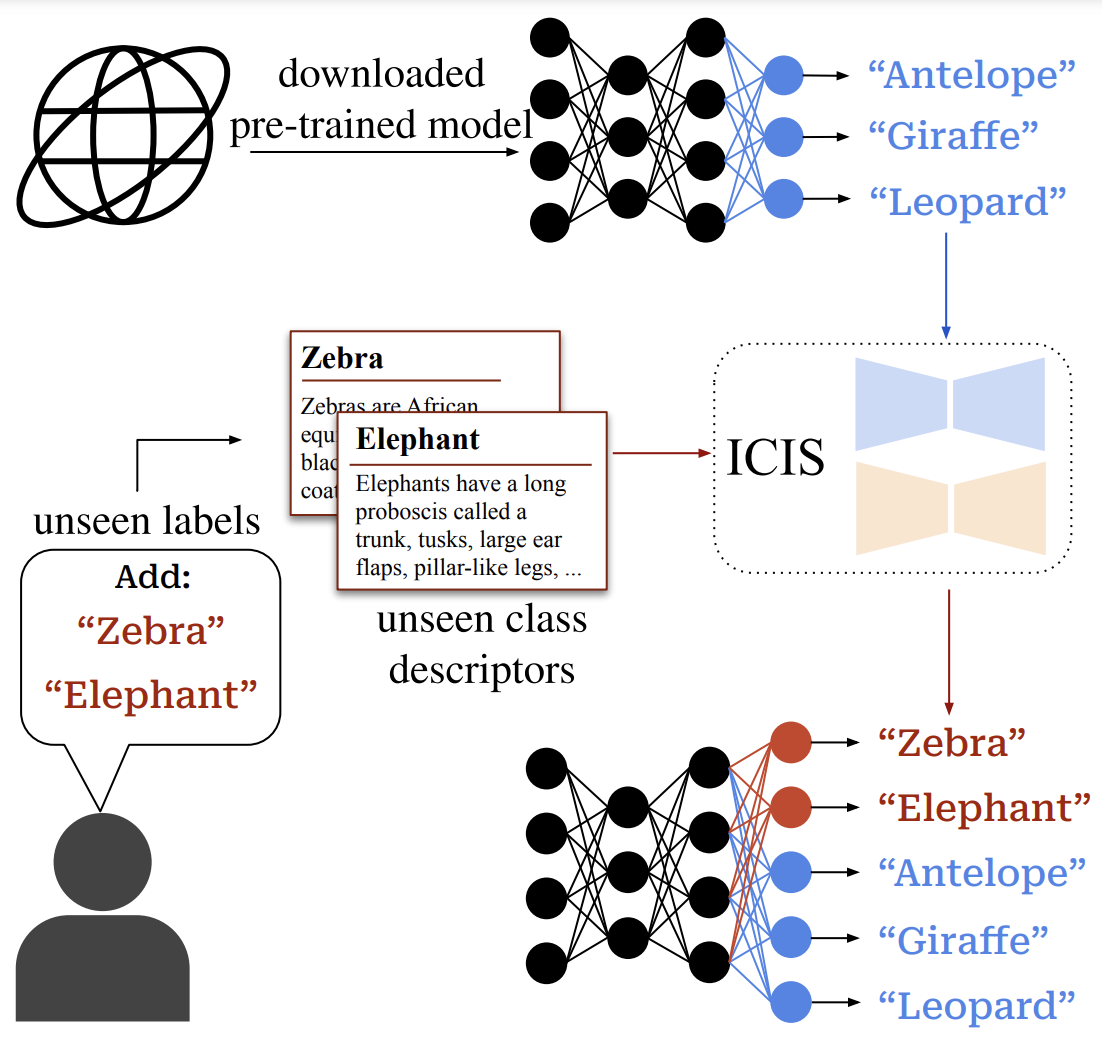

Image-free Classifier Injection for Zero-Shot ClassificationAnders Christensen , Massimiliano Mancini, A. Sophia Koepke , and 2 more authorsIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023

Image-free Classifier Injection for Zero-Shot ClassificationAnders Christensen , Massimiliano Mancini, A. Sophia Koepke , and 2 more authorsIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023Zero-shot learning models achieve remarkable results on image classification for samples from classes that were not seen during training. However, such models must be trained from scratch with specialised methods: therefore, access to a training dataset is required when the need for zero-shot classification arises. In this paper, we aim to equip pre-trained models with zero-shot classification capabilities without the use of image data. We achieve this with our proposed Image-free Classifier Injection with Semantics (ICIS) that injects classifiers for new, unseen classes into pre-trained classification models in a post-hoc fashion without relying on image data. Instead, the existing classifier weights and simple class-wise descriptors, such as class names or attributes, are used. ICIS has two encoder-decoder networks that learn to reconstruct classifier weights from descriptors (and vice versa), exploiting (cross-)reconstruction and cosine losses to regularise the decoding process. Notably, ICIS can be cheaply trained and applied directly on top of pre-trained classification models. Experiments on benchmark ZSL datasets show that ICIS produces unseen classifier weights that achieve strong (generalised) zero-shot classification performance.

@inproceedings{christensen2023image, title = {Image-free Classifier Injection for Zero-Shot Classification}, author = {Christensen, Anders and Mancini, Massimiliano and Koepke, A. Sophia and Winther, Ole and Akata, Zeynep}, booktitle = {Proceedings of the International Conference on Computer Vision (ICCV) 2023}, year = {2023}, } -

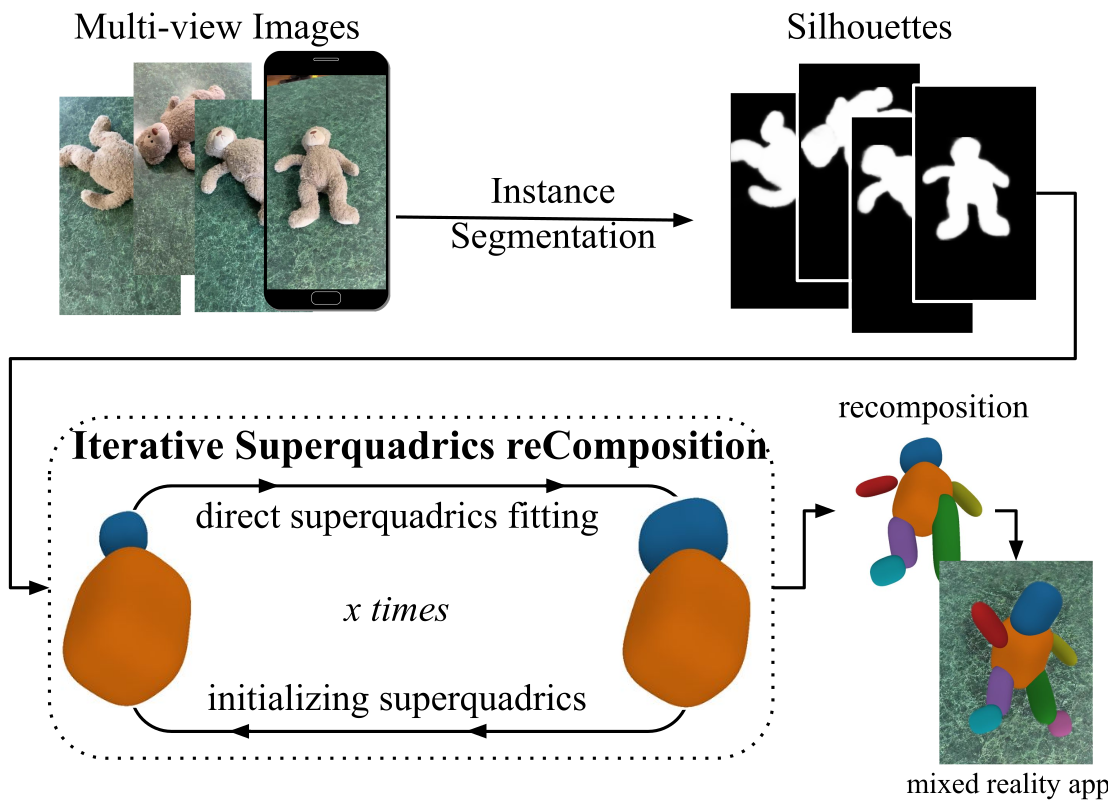

Iterative Superquadric Recomposition of 3D Objects from Multiple ViewsStephan Alaniz , Massimiliano Mancini, and Zeynep AkataIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023

Iterative Superquadric Recomposition of 3D Objects from Multiple ViewsStephan Alaniz , Massimiliano Mancini, and Zeynep AkataIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023Humans are good at recomposing novel objects, i.e they can identify commonalities between unknown objects from general structure to finer detail, an ability difficult to replicate by machines. We propose a framework, ISCO, to recompose an object using 3D superquadrics as semantic parts directly from 2D views without training a model that uses 3D supervision. To achieve this, we optimize the superquadric parameters that compose a specific instance of the object, comparing its rendered 3D view and 2D image silhouette. Our ISCO framework iteratively adds new superquadrics wherever the reconstruction error is high, abstracting first coarse regions and then finer details of the target object. With this simple coarse-to-fine inductive bias, ISCO provides consistent superquadrics for related object parts, despite not having any semantic supervision. Since ISCO does not train any neural network, it is also inherently robust to out of distribution objects. Experiments show that, compared to recent single instance superquadrics reconstruction approaches, ISCO provides consistently more accurate 3D reconstructions, even from images in the wild.

@inproceedings{alaniz2023iterative, title = {Iterative Superquadric Recomposition of 3D Objects from Multiple Views}, author = {Alaniz, Stephan and Mancini, Massimiliano and Akata, Zeynep}, booktitle = {Proceedings of the International Conference on Computer Vision (ICCV) 2023}, year = {2023}, } -

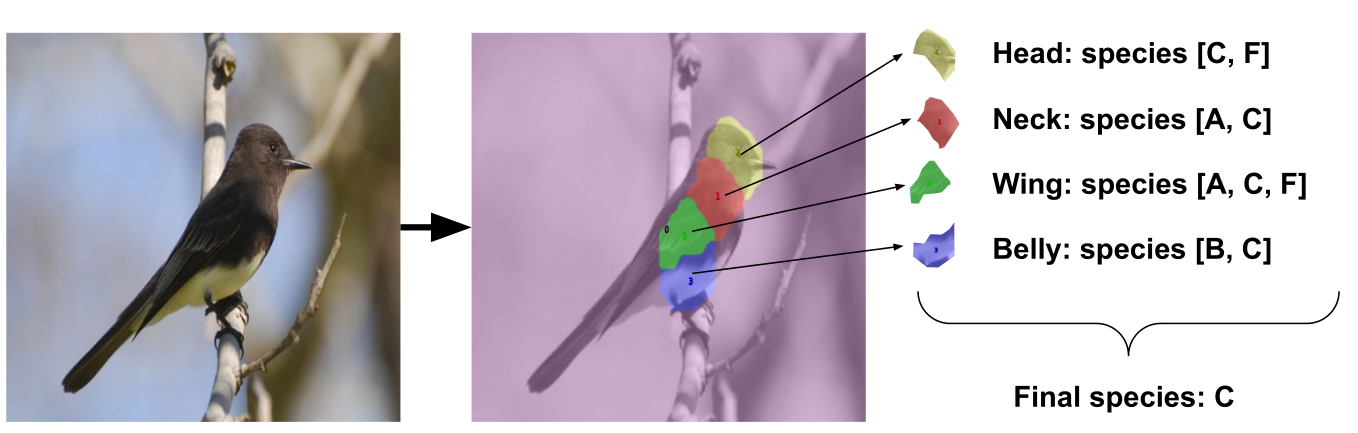

PDiscoNet: Semantically consistent part discovery for fine-grained recognitionRobert Klis , Stephan Alaniz , Massimiliano Mancini, and 4 more authorsIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023

PDiscoNet: Semantically consistent part discovery for fine-grained recognitionRobert Klis , Stephan Alaniz , Massimiliano Mancini, and 4 more authorsIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023Fine-grained classification often requires recognizing specific object parts, such as beak shape and wing patterns for birds. Encouraging a fine-grained classification model to first detect such parts and then using them to infer the class could help us gauge whether the model is indeed looking at the right details better than with interpretability methods that provide a single attribution map. We propose PDiscoNet to discover object parts by using only image-level class labels along with priors encouraging the parts to be: discriminative, compact, distinct from each other, equivariant to rigid transforms, and active in at least some of the images. In addition to using the appropriate losses to encode these priors, we propose to use part-dropout, where full part feature vectors are dropped at once to prevent a single part from dominating in the classification, and part feature vector modulation, which makes the information coming from each part distinct from the perspective of the classifier. Our results on CUB, CelebA, and PartImageNet show that the proposed method provides substantially better part discovery performance than previous methods while not requiring any additional hyper-parameter tuning and without penalizing the classification performance.

@inproceedings{vanderklis2023pdisconet, title = {PDiscoNet: Semantically consistent part discovery for fine-grained recognition}, author = {van der Klis, Robert and Alaniz, Stephan and Mancini, Massimiliano and Dantas, Cassio F. and Ienco, Dino and Akata, Zeynep and Marcos, Diego}, booktitle = {Proceedings of the International Conference on Computer Vision (ICCV) 2023}, year = {2023}, } -

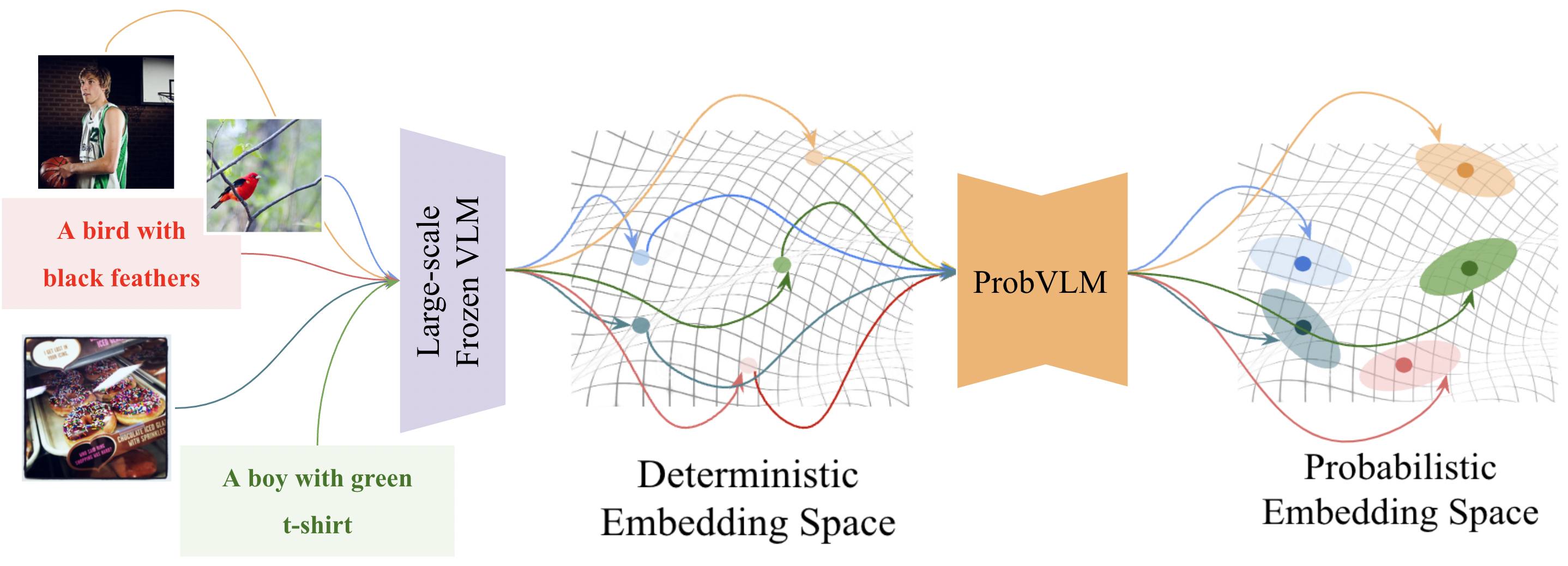

ProbVLM: Probabilistic Adapter for Frozen Vison-Language ModelsUddeshya Upadhyay , Shyamgopal Karthik , Massimiliano Mancini, and 1 more authorIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023

ProbVLM: Probabilistic Adapter for Frozen Vison-Language ModelsUddeshya Upadhyay , Shyamgopal Karthik , Massimiliano Mancini, and 1 more authorIn Proceedings of the International Conference on Computer Vision (ICCV) 2023 , Oct 2023Large-scale vision-language models (VLMs) like CLIP successfully find correspondences between images and text. Through the standard deterministic mapping process, an image or a text sample is mapped to a single vector in the embedding space. This is problematic: as multiple samples (images or text) can abstract the same concept in the physical world, deterministic embeddings do not reflect the inherent ambiguity in the embedding space. We propose ProbVLM, a probabilistic adapter that estimates probability distributions for the embeddings of pre-trained VLMs via inter/intra-modal alignment in a post-hoc manner without needing large-scale datasets or computing. On four challenging datasets, i.e., COCO, Flickr, CUB, and Oxford-flowers, we estimate the multi-modal embedding uncertainties for two VLMs, i.e., CLIP and BLIP, quantify the calibration of embedding uncertainties in retrieval tasks and show that ProbVLM outperforms other methods. Furthermore, we propose active learning and model selection as two real-world downstream tasks for VLMs and show that the estimated uncertainty aids both tasks. Lastly, we present a novel technique for visualizing the embedding distributions using a large-scale pre-trained latent diffusion model.

@inproceedings{upadhyay2023probvlm, title = {ProbVLM: Probabilistic Adapter for Frozen Vison-Language Models}, author = {Upadhyay, Uddeshya and Karthik, Shyamgopal and Mancini, Massimiliano and Akata, Zeynep}, booktitle = {Proceedings of the International Conference on Computer Vision (ICCV) 2023}, year = {2023}, } -

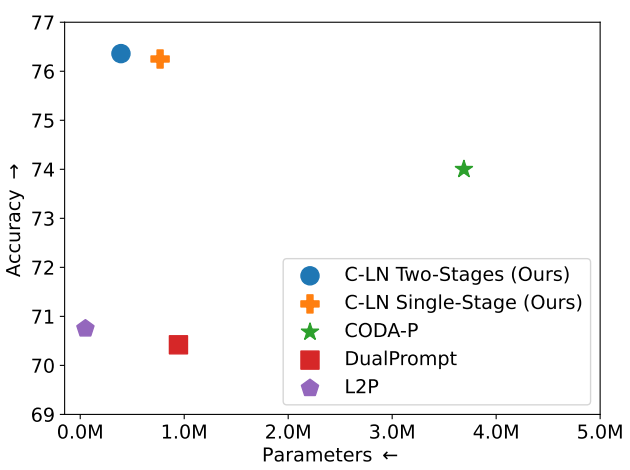

On the Effectiveness of LayerNorm Tuning for Continual Learning in Vision TransformersThomas De Min , Massimiliano Mancini, Karteek Alahari , and 2 more authorsIn The First Workshop on Visual Continual Learning at ICCV 2023 , Oct 2023

On the Effectiveness of LayerNorm Tuning for Continual Learning in Vision TransformersThomas De Min , Massimiliano Mancini, Karteek Alahari , and 2 more authorsIn The First Workshop on Visual Continual Learning at ICCV 2023 , Oct 2023State-of-the-art rehearsal-free continual learning methods exploit the peculiarities of Vision Transformers to learn task-specific prompts, drastically reducing catastrophic forgetting. However, there is a tradeoff between the number of learned parameters and the performance, making such models computationally expensive. In this work, we aim to reduce this cost while maintaining competitive performance. We achieve this by revisiting and extending a simple transfer learning idea: learning task-specific normalization layers. Specifically, we tune the scale and bias parameters of LayerNorm for each continual learning task, selecting them at inference time based on the similarity between task-specific keys and the output of the pre-trained model. To make the classifier robust to incorrect selection of parameters during inference, we introduce a two-stage training procedure, where we first optimize the task-specific parameters and then train the classifier with the same selection procedure of the inference time. Experiments on ImageNet-R and CIFAR-100 show that our method achieves results that are either superior or on par with the state of the art while being computationally cheaper.

@inproceedings{demin2023effectiveness, title = {On the Effectiveness of LayerNorm Tuning for Continual Learning in Vision Transformers}, author = {De Min, Thomas and Mancini, Massimiliano and Alahari, Karteek and Alameda-Pineda, Xavier and Ricci, Elisa}, booktitle = {The First Workshop on Visual Continual Learning at ICCV 2023}, year = {2023}, }

2022

- Attention Consistency on Visual Corruptions for Single-Source Domain GeneralizationIlke Cugu , Massimiliano Mancini, Yanbei Chen , and 1 more authorIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2022

Generalizing visual recognition models trained on a single distribution to unseen input distributions (i.e. domains) requires making them robust to superfluous correlations in the training set. In this work, we achieve this goal by altering the training images to simulate new domains and imposing consistent visual attention across the different views of the same sample. We discover that the first objective can be simply and effectively met through visual corruptions. Specifically, we alter the content of the training images using the nineteen corruptions of the ImageNet-C benchmark and three additional transformations based on Fourier transform. Since these corruptions preserve object locations, we propose an attention consistency loss to ensure that class activation maps across original and corrupted versions of the same training sample are aligned. We name our model Attention Consistency on Visual Corruptions (ACVC). We show that ACVC consistently achieves the state of the art on three single-source domain generalization benchmarks, PACS, COCO, and the large-scale DomainNet.

@inproceedings{Cugu_2022_CVPR, author = {Cugu, Ilke and Mancini, Massimiliano and Chen, Yanbei and Akata, Zeynep}, title = {Attention Consistency on Visual Corruptions for Single-Source Domain Generalization}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops}, month = jun, year = {2022}, } -

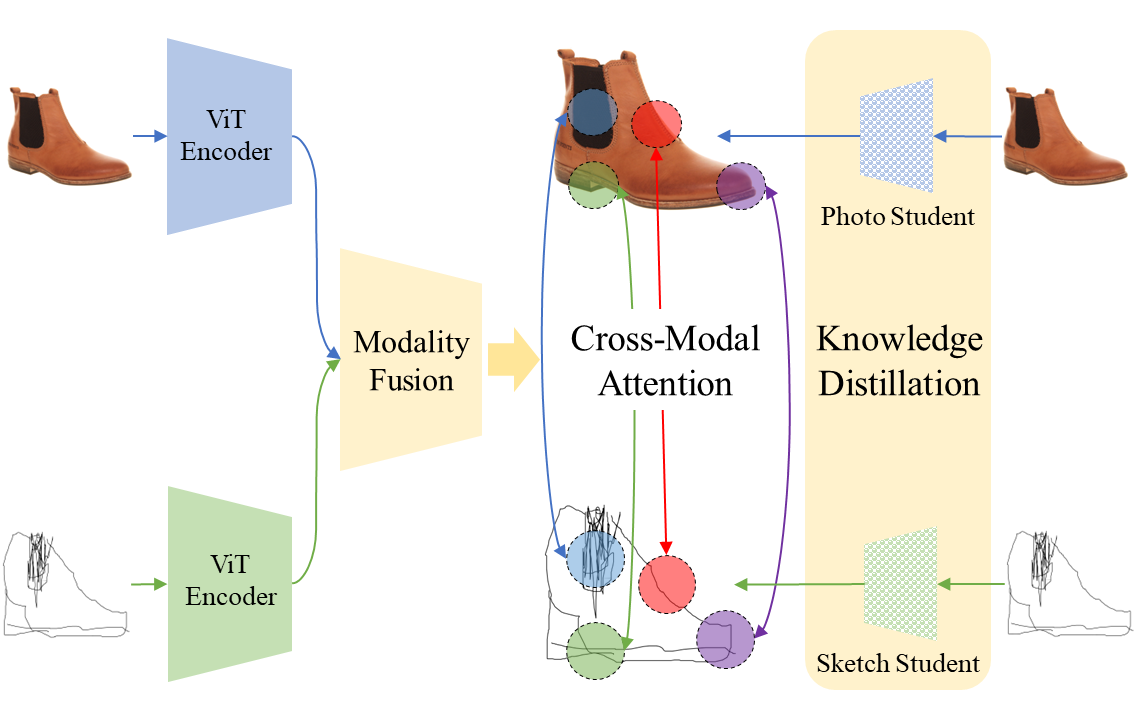

Cross-Modal Fusion Distillation for Fine-Grained Sketch-Based Image RetrievalAbhra Chaudhuri , Massimiliano Mancini, Yanbei Chen , and 2 more authorsIn British Machine Vision Conference , Jun 2022

Cross-Modal Fusion Distillation for Fine-Grained Sketch-Based Image RetrievalAbhra Chaudhuri , Massimiliano Mancini, Yanbei Chen , and 2 more authorsIn British Machine Vision Conference , Jun 2022Representation learning for sketch-based image retrieval has mostly been tackled by learning embeddings that discard modality-specific information. As instances from different modalities can often provide complementary information describing the underlying concept, we propose a cross-attention framework for Vision Transformers (XModalViT) that fuses modality-specific information instead of discarding them. Our framework first maps paired datapoints from the individual photo and sketch modalities to fused representations that unify information from both modalities. We then decouple the input space of the aforementioned modality fusion network into independent encoders of the individual modalities via contrastive and relational cross-modal knowledge distillation. Such encoders can then be applied to downstream tasks like cross-modal retrieval. We demonstrate the expressive capacity of the learned representations by performing a wide range of experiments and achieving state-of-the-art results on three fine-grained sketch-based image retrieval benchmarks: Shoe-V2, Chair-V2 and Sketchy.

@inproceedings{chaudhuri2022cross, title = {Cross-Modal Fusion Distillation for Fine-Grained Sketch-Based Image Retrieval}, author = {Chaudhuri, Abhra and Mancini, Massimiliano and Chen, Yanbei and Akata, Zeynep and Dutta, Anjan}, booktitle = {British Machine Vision Conference}, year = {2022}, } -

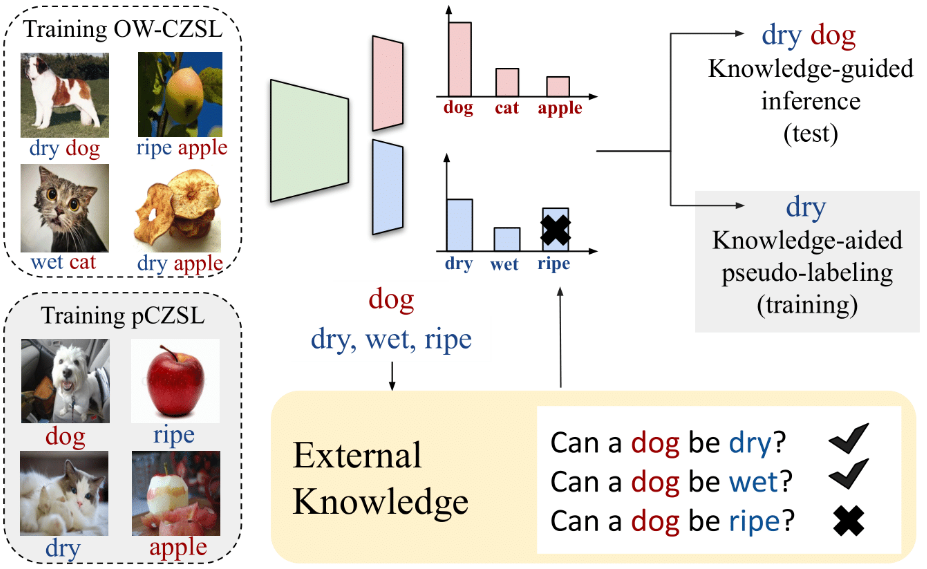

KG-SP: Knowledge Guided Simple Primitives for Open World Compositional Zero-Shot LearningShyamgopal Karthik , Massimiliano Mancini, and Zeynep AkataIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , Jun 2022

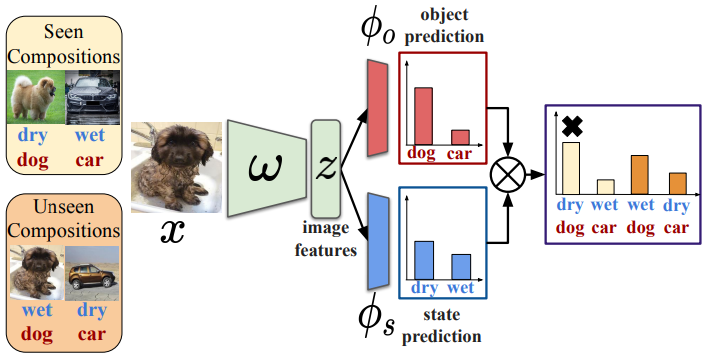

KG-SP: Knowledge Guided Simple Primitives for Open World Compositional Zero-Shot LearningShyamgopal Karthik , Massimiliano Mancini, and Zeynep AkataIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , Jun 2022The goal of open-world compositional zero-shot learning (OW-CZSL) is to recognize compositions of state and objects in images, given only a subset of them during training and no prior on the unseen compositions. In this setting, models operate on a huge output space, containing all possible state-object compositions. While previous works tackle the problem by learning embeddings for the compositions jointly, here we revisit a simple CZSL baseline and predict the primitives, ie states and objects, independently. To ensure that the model develops primitive-specific features, we equip the state and object classifiers with separate, non-linear feature extractors. Moreover, we estimate the feasibility of each composition through external knowledge, using this prior to remove unfeasible compositions from the output space. Finally, we propose a new setting, ie CZSL under partial supervision (pCZSL), where either only objects or state labels are available during training and we can use our prior to estimate the missing labels. Our model, Knowledge-Guided Simple Primitives (KG-SP), achieves the state of the art in both OW-CZSL and pCZSL, surpassing most recent competitors even when coupled with semi-supervised learning techniques

@inproceedings{Karthik_2022_CVPR, author = {Karthik, Shyamgopal and Mancini, Massimiliano and Akata, Zeynep}, title = {KG-SP: Knowledge Guided Simple Primitives for Open World Compositional Zero-Shot Learning}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, year = {2022}, } -

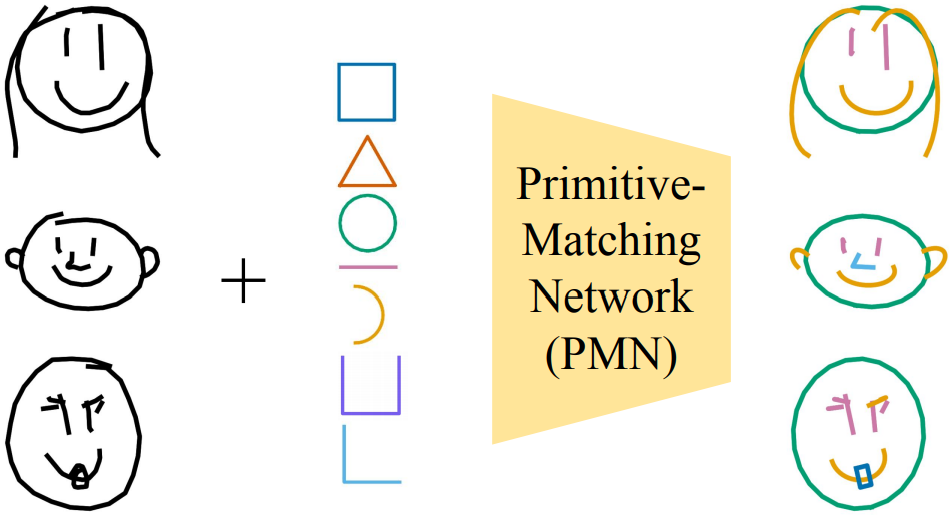

Abstracting Sketches through Simple PrimitivesStephan Alaniz , Massimiliano Mancini, Anjan Dutta , and 2 more authorsIn Proceedings of the European Conference on Computer Vision (ECCV) 2022 , Jun 2022

Abstracting Sketches through Simple PrimitivesStephan Alaniz , Massimiliano Mancini, Anjan Dutta , and 2 more authorsIn Proceedings of the European Conference on Computer Vision (ECCV) 2022 , Jun 2022Humans show high-level of abstraction capabilities in games that require quickly communicating object information. They decompose the message content into multiple parts and communicate them in an interpretable protocol. Toward equipping machines with such capabilities, we propose the Primitive-based Sketch Abstraction task where the goal is to represent sketches using a fixed set of drawing primitives under the influence of a budget. To solve this task, our PrimitiveMatching Network (PMN), learns interpretable abstractions of a sketch in a self supervised manner. Specifically, PMN maps each stroke of a sketch to its most similar primitive in a given set, predicting an affine transformation that aligns the selected primitive to the target stroke. We learn this stroke-to-primitive mapping end-to-end with a distancetransform loss that is minimal when the original sketch is precisely reconstructed with the predicted primitives. Our PMN abstraction empirically achieves the highest performance on sketch recognition and sketch-based image retrieval given a communication budget, while at the same time being highly interpretable. This opens up new possibilities for sketch analysis, such as comparing sketches by extracting the most relevant primitives that define an object category.

@inproceedings{alaniz2022abstracting, title = {Abstracting Sketches through Simple Primitives}, author = {Alaniz, Stephan and Mancini, Massimiliano and Dutta, Anjan and Marcos, Diego and Akata, Zeynep}, booktitle = {Proceedings of the European Conference on Computer Vision (ECCV) 2022}, year = {2022}, } -

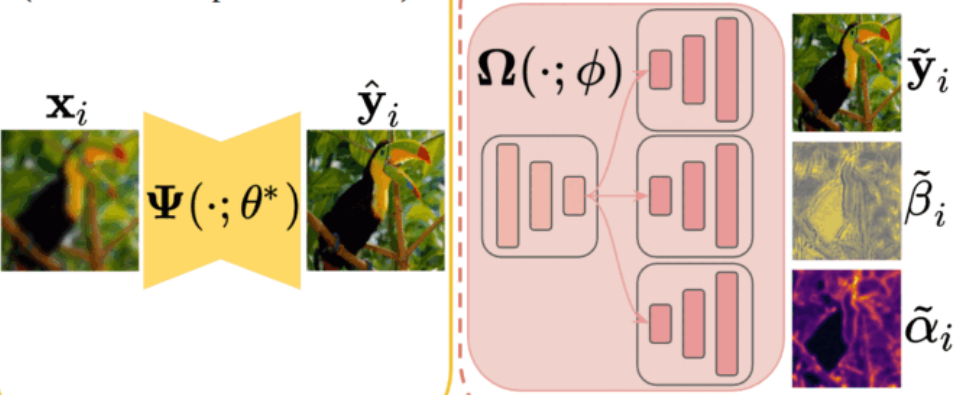

BayesCap: Bayesian Identity Cap for Calibrated Uncertainty in Frozen Neural NetworksUddeshya Upadhyay , Shyamgopal Karthik , Yanbei Chen , and 2 more authorsIn Proceedings of the European Conference on Computer Vision (ECCV) 2022 , Jun 2022

BayesCap: Bayesian Identity Cap for Calibrated Uncertainty in Frozen Neural NetworksUddeshya Upadhyay , Shyamgopal Karthik , Yanbei Chen , and 2 more authorsIn Proceedings of the European Conference on Computer Vision (ECCV) 2022 , Jun 2022High-quality calibrated uncertainty estimates are crucial for numerous real-world applications, especially for deep learning-based deployed ML systems. While Bayesian deep learning techniques allow uncertainty estimation, training them with large-scale datasets is an expensive process that does not always yield models competitive with non-Bayesian counterparts. Moreover, many of the high-performing deep learning models that are already trained and deployed are non-Bayesian in nature and do not provide uncertainty estimates. To address these issues, we propose BayesCap that learns a Bayesian identity mapping for the frozen model, allowing uncertainty estimation. BayesCap is a memory-efficient method that can be trained on a small fraction of the original dataset, enhancing pretrained non-Bayesian computer vision models by providing calibrated uncertainty estimates for the predictions without (i) hampering the performance of the model and (ii) the need for expensive retraining the model from scratch. The proposed method is agnostic to various architectures and tasks. We show the efficacy of our method on a wide variety of tasks with a diverse set of architectures, including image super-resolution, deblurring, inpainting, and crucial application such as medical image translation. Moreover, we apply the derived uncertainty estimates to detect out-of-distribution samples in critical scenarios like depth estimation in autonomous driving.

@inproceedings{upadhyay2022bayescap, title = {BayesCap: Bayesian Identity Cap for Calibrated Uncertainty in Frozen Neural Networks}, author = {Upadhyay, Uddeshya and Karthik, Shyamgopal and Chen, Yanbei and Mancini, Massimiliano and Akata, Zeynep}, booktitle = {Proceedings of the European Conference on Computer Vision (ECCV) 2022}, year = {2022}, } -

Relational Proxies: Emergent Relationships as Fine-Grained DiscriminatorsAbhra Chaudhuri , Massimiliano Mancini, Zeynep Akata , and 1 more authorAdvances in Neural Information Processing Systems (NeurIPS), Jun 2022

Relational Proxies: Emergent Relationships as Fine-Grained DiscriminatorsAbhra Chaudhuri , Massimiliano Mancini, Zeynep Akata , and 1 more authorAdvances in Neural Information Processing Systems (NeurIPS), Jun 2022Fine-grained categories that largely share the same set of parts cannot be discriminated based on part information alone, as they mostly differ in the way the local parts relate to the overall global structure of the object. We propose Relational Proxies, a novel approach that leverages the relational information between the global and local views of an object for encoding its semantic label. Starting with a rigorous formalization of the notion of distinguishability between fine-grained categories, we prove the necessary and sufficient conditions that a model must satisfy in order to learn the underlying decision boundaries in the fine-grained setting. We design Relational Proxies based on our theoretical findings and evaluate it on seven challenging fine-grained benchmark datasets and achieve state-of-the-art results on all of them, surpassing the performance of all existing works with a margin exceeding 4% in some cases. We also experimentally validate our theory on fine-grained distinguishability and obtain consistent results across multiple benchmarks.

@article{chaudhuri2022relational, title = {Relational Proxies: Emergent Relationships as Fine-Grained Discriminators}, author = {Chaudhuri, Abhra and Mancini, Massimiliano and Akata, Zeynep and Dutta, Anjan}, journal = {Advances in Neural Information Processing Systems (NeurIPS)}, year = {2022}, }

2021

-

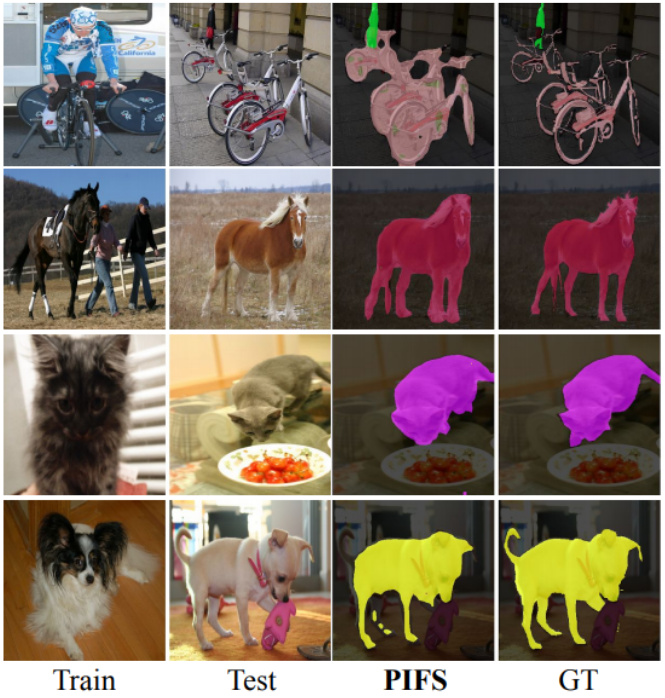

Prototype-based Incremental Few-Shot Semantic SegmentationFabio Cermelli , Massimiliano Mancini, Yongqin Xian , and 2 more authorsIn British Machine Vision Conference , Jun 2021

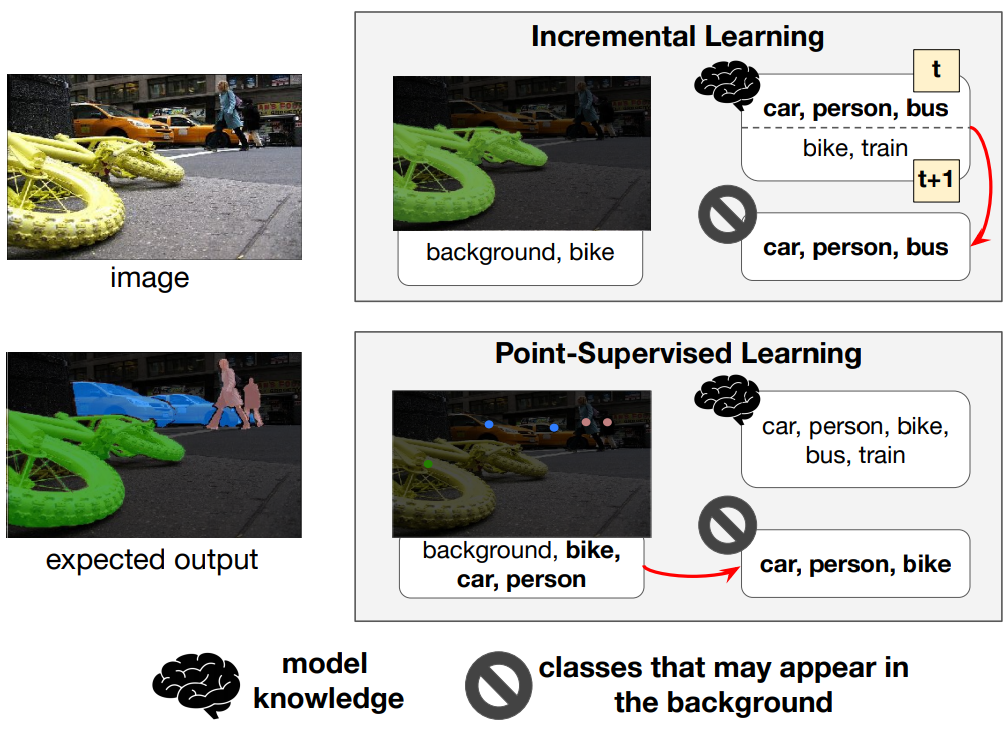

Prototype-based Incremental Few-Shot Semantic SegmentationFabio Cermelli , Massimiliano Mancini, Yongqin Xian , and 2 more authorsIn British Machine Vision Conference , Jun 2021Semantic segmentation models have two fundamental weaknesses: i) they require large training sets with costly pixel-level annotations, and ii) they have a static output space, constrained to the classes of the training set. Toward addressing both problems, we introduce a new task, Incremental Few-Shot Segmentation (iFSS). The goal of iFSS is to extend a pretrained segmentation model with new classes from few annotated images and without access to old training data. To overcome the limitations of existing models iniFSS, we propose Prototype-based Incremental Few-Shot Segmentation (PIFS) that couples prototype learning and knowledge distillation. PIFS exploits prototypes to initialize the classifiers of new classes, fine-tuning the network to refine its features representation. We design a prototype-based distillation loss on the scores of both old and new class prototypes to avoid overfitting and forgetting, and batch-renormalization to cope with non-i.i.d.few-shot data. We create an extensive benchmark for iFSS showing that PIFS outperforms several few-shot and incremental learning methods in all scenarios.

@inproceedings{cermelli2021prototype, title = {Prototype-based Incremental Few-Shot Semantic Segmentation}, author = {Cermelli, Fabio and Mancini, Massimiliano and Xian, Yongqin and Akata, Zeynep and Caputo, Barbara}, booktitle = {British Machine Vision Conference}, year = {2021}, } -

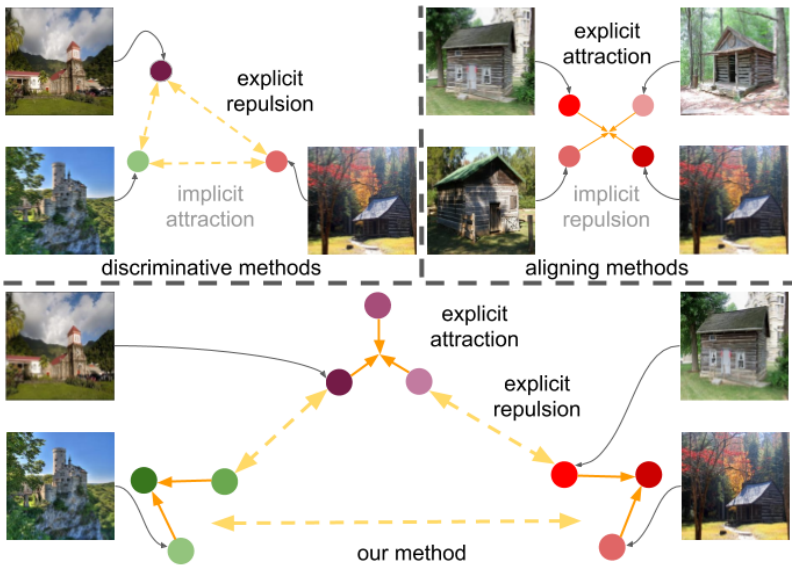

Concurrent Discrimination and Alignment for Self-Supervised Feature LearningAnjan Dutta , Massimiliano Mancini, and Zeynep AkataIn IEEE/CVF International Conference on Computer Vision (ICCV) Workshops , Jun 2021

Concurrent Discrimination and Alignment for Self-Supervised Feature LearningAnjan Dutta , Massimiliano Mancini, and Zeynep AkataIn IEEE/CVF International Conference on Computer Vision (ICCV) Workshops , Jun 2021Existing self-supervised learning methods learn representation by means of pretext tasks which are either (1) discriminating that explicitly specify which features should be separated or (2) aligning that precisely indicate which features should be closed together, but ignore the fact how to jointly and principally define which features to be repelled and which ones to be attracted. In this work, we combine the positive aspects of the discriminating and aligning methods, and design a hybrid method that addresses the above issue. Our method explicitly specifies the repulsion and attraction mechanism respectively by discriminative predictive task and concurrently maximizing mutual information between paired views sharing redundant information. We qualitatively and quantitatively show that our proposed model learns better features that are more effective for the diverse downstream tasks ranging from classification to semantic segmentation. Our experiments on nine established benchmarks show that the proposed model consistently outperforms the existing state-of-the-art results of self-supervised and transfer learning protocol.

@inproceedings{dutta2021concurrent, title = {Concurrent Discrimination and Alignment for Self-Supervised Feature Learning}, author = {Dutta, Anjan and Mancini, Massimiliano and Akata, Zeynep}, booktitle = {IEEE/CVF International Conference on Computer Vision (ICCV) Workshops}, pages = {2189--2198}, year = {2021}, } -

Open World Compositional Zero-Shot LearningMassimiliano Mancini, Muhammad Ferjad Naeem , Yongqin Xian , and 1 more authorIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , Jun 2021

Open World Compositional Zero-Shot LearningMassimiliano Mancini, Muhammad Ferjad Naeem , Yongqin Xian , and 1 more authorIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) , Jun 2021Compositional Zero-Shot learning (CZSL) requires to recognize state-object compositions unseen during training. In this work, instead of assuming prior knowledge about the unseen compositions, we operate in the open world setting, where the search space includes a large number of unseen compositions some of which might be unfeasible. In this setting, we start from the cosine similarity between visual features and compositional embeddings. After estimating the feasibility score of each composition, we use these scores to either directly mask the output space or as a margin for the cosine similarity between visual features and compositional embeddings during training. Our experiments on two standard CZSL benchmarks show that all the methods suffer severe performance degradation when applied in the open world setting. While our simple CZSL model achieves state-of-the-art performances in the closed world scenario, our feasibility scores boost the performance of our approach in the open world setting, clearly outperforming the previous state of the art.

@inproceedings{mancini2021open, title = {Open World Compositional Zero-Shot Learning}, author = {Mancini, Massimiliano and Naeem, Muhammad Ferjad and Xian, Yongqin and Akata, Zeynep}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, year = {2021}, } -

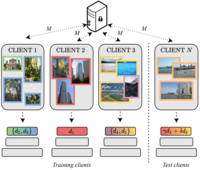

Cluster-driven Graph Federated Learning over Multiple DomainsDebora Caldarola , Massimiliano Mancini, Fabio Galasso , and 3 more authorsIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2021

Cluster-driven Graph Federated Learning over Multiple DomainsDebora Caldarola , Massimiliano Mancini, Fabio Galasso , and 3 more authorsIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2021Federated Learning (FL) deals with learning a central model (ie the server) in privacy-constrained scenarios, where data are stored on multiple devices (ie the clients). The central model has no direct access to the data, but only to the updates of the parameters computed locally by each client. This raises a problem, known as statistical heterogeneity, because the clients may have different data distributions (ie domains). This is only partly alleviated by clustering the clients. Clustering may reduce heterogeneity by identifying the domains, but it deprives each cluster model of the data and supervision of others. Here we propose a novel Cluster-driven Graph Federated Learning (FedCG). In FedCG, clustering serves to address statistical heterogeneity, while Graph Convolutional Networks (GCNs) enable sharing knowledge across them. FedCG: i) identifies the domains via an FL-compliant clustering and instantiates domain-specific modules (residual branches) for each domain; ii) connects the domain-specific modules through a GCN at training to learn the interactions among domains and share knowledge; and iii) learns to cluster unsupervised via teacher-student classifier-training iterations and to address novel unseen test domains via their domain soft-assignment scores. Thanks to the unique interplay of GCN over clusters, FedCG achieves the state-of-the-art on multiple FL benchmarks.

@inproceedings{caldarola2021cluster, author = {Caldarola, Debora and Mancini, Massimiliano and Galasso, Fabio and Ciccone, Marco and Rodolà, Emanuele and Caputo, Barbara}, title = {Cluster-driven Graph Federated Learning over Multiple Domains}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops}, year = {2021}, } -

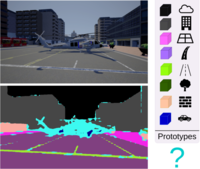

Detecting Anomalies in Semantic Segmentation with PrototypesDario Fontanel , Fabio Cermelli , Massimiliano Mancini, and 1 more authorIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2021

Detecting Anomalies in Semantic Segmentation with PrototypesDario Fontanel , Fabio Cermelli , Massimiliano Mancini, and 1 more authorIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2021Traditional semantic segmentation methods can recognize at test time only the classes that are present in the training set. This is a significant limitation, especially for semantic segmentation algorithms mounted on intelligent autonomous systems, deployed in realistic settings. Regardless of how many classes the system has seen at training time, it is inevitable that unexpected, unknown objects will appear at test time. The failure in identifying such anomalies may lead to incorrect, even dangerous behaviors of the autonomous agent equipped with such segmentation model when deployed in the real world. Current state of the art of anomaly segmentation uses generative models, exploiting their incapability to reconstruct patterns unseen during training. However, training these models is expensive, and their generated artifacts may create false anomalies. In this paper we take a different route and we propose to address anomaly segmentation through prototype learning. Our intuition is that anomalous pixels are those that are dissimilar to all class prototypes known by the model. We extract class prototypes from the training data in a lightweight manner using a cosine similarity-based classifier. Experiments on StreetHazards show that our approach achieves the new state of the art, with a significant margin over previous works, despite the reduced computational overhead.

@inproceedings{fontanel2021detecting, author = {Fontanel, Dario and Cermelli, Fabio and Mancini, Massimiliano and Caputo, Barbara}, title = {Detecting Anomalies in Semantic Segmentation with Prototypes}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops}, year = {2021}, } -

A Closer Look at Self-training for Zero-Label Semantic SegmentationGiuseppe Pastore , Fabio Cermelli , Yongqin Xian , and 3 more authorsIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2021

A Closer Look at Self-training for Zero-Label Semantic SegmentationGiuseppe Pastore , Fabio Cermelli , Yongqin Xian , and 3 more authorsIn IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops , Jun 2021Being able to segment unseen classes not observed during training is an important technical challenge in deep learning, because of its potential to reduce the expensive annotation required for semantic segmentation. Prior zero-label semantic segmentation works approach this task by learning visual-semantic embeddings or generative models. However, they are prone to overfitting on the seen classes because there is no training signal for them. In this paper, we study the challenging generalized zero-label semantic segmentation task where the model has to segment both seen and unseen classes at test time. We assume that pixels of unseen classes could be present in the training images but without being annotated. Our idea is to capture the latent information on unseen classes by supervising the model with self-produced pseudo-labels for unlabeled pixels. We propose a consistency regularizer to filter out noisy pseudo-labels by taking the intersections of the pseudo-labels generated from different augmentations of the same image. Our framework generates pseudo-labels and then retrain the model with human-annotated and pseudo-labelled data. This procedure is repeated for several iterations. As a result, our approach achieves the new state-of-the-art on PascalVOC12 and COCO-stuff datasets in the challenging generalized zero-label semantic segmentation setting, surpassing other existing methods addressing this task with more complex strategies.

@inproceedings{pastore2021closer, author = {Pastore, Giuseppe and Cermelli, Fabio and Xian, Yongqin and Mancini, Massimiliano and Akata, Zeynep and Caputo, Barbara}, title = {A Closer Look at Self-training for Zero-Label Semantic Segmentation}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops}, year = {2021}, } -

Shape Consistent 2D Keypoint Estimation under Domain ShiftLevi O. Vasconcelos , Massimiliano Mancini, Davide Boscaini , and 3 more authorsIn 2020 25th International Conference on Pattern Recognition (ICPR) , Jun 2021